This is the multi-page printable view of this section. Click here to print.

Findy Agency Blog

- Autonomous LLMs Compromise Open Source Trust

- Rethinking SSI

- The Swiss Army Knife for the Agency Project, Part 3: Other GitHub Tools

- The Swiss Army Knife for the Agency Project, Part 2: Release Management with GitHub

- The Swiss Army Knife for the Agency Project, Part 1: GitHub Actions

- Issuing Chatbot

- I want mDL!

- Managing GitHub Branch Protections

- Path to Passwordless

- Test Coverage Beyond Unit Testing

- How To Write Modifiable & Readable Go Code

- How To Write Performant Go Code

- GopherCon UK 2023

- Beautiful State-Machines - FSM Part II

- The Agency Workshop

- The Time to Build SSI Capabilities Is Now

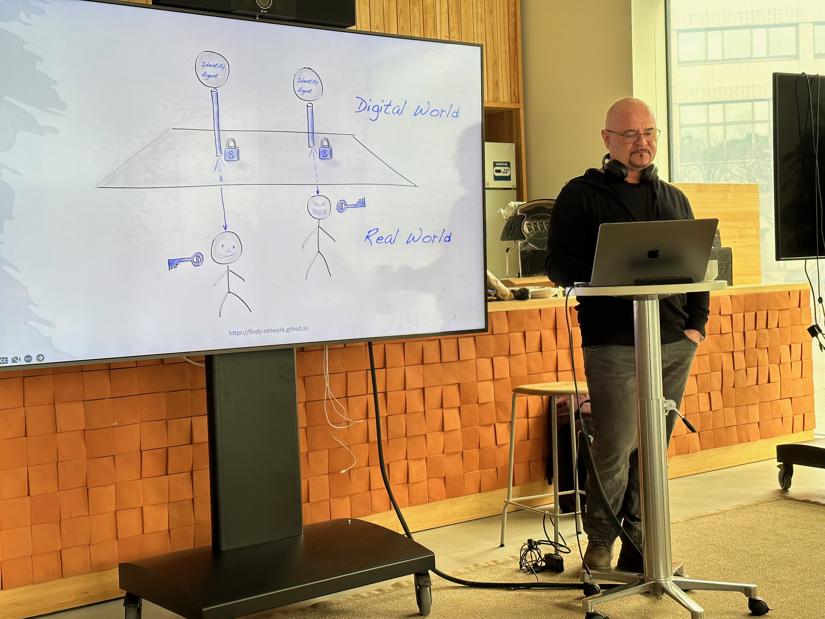

- Digital Identity Hack Part 3 – Introduction to SSI Presentation

- Digital Identity Hack Part 2 – Panel Discussion on Blockchain Projects

- Digital Identity Hack – Unlocking the Potential of SSI

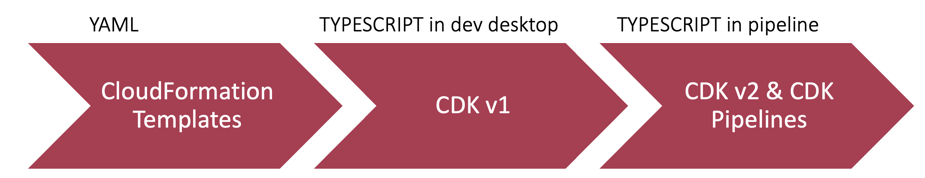

- Deploying with CDK Pipeline

- Agency's IaC Journey

- No-Code SSI Chatbots - FSM Part I

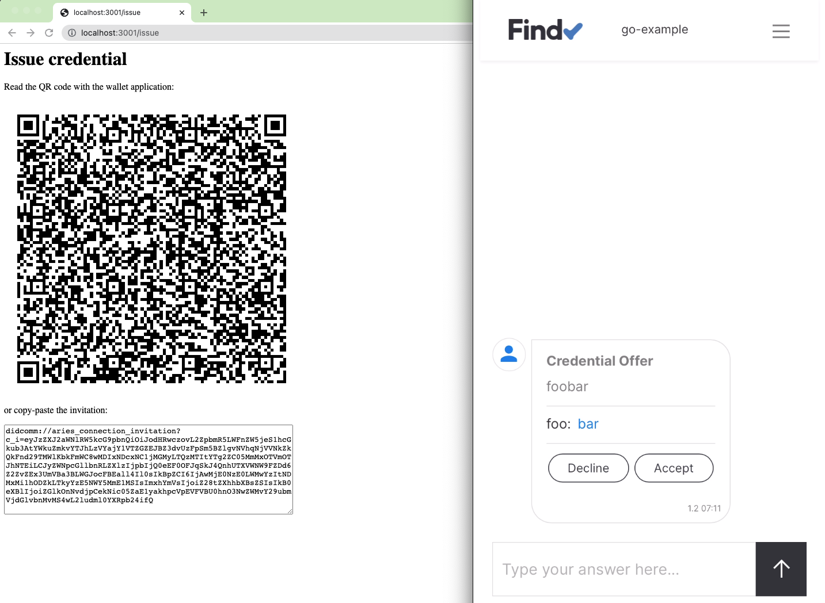

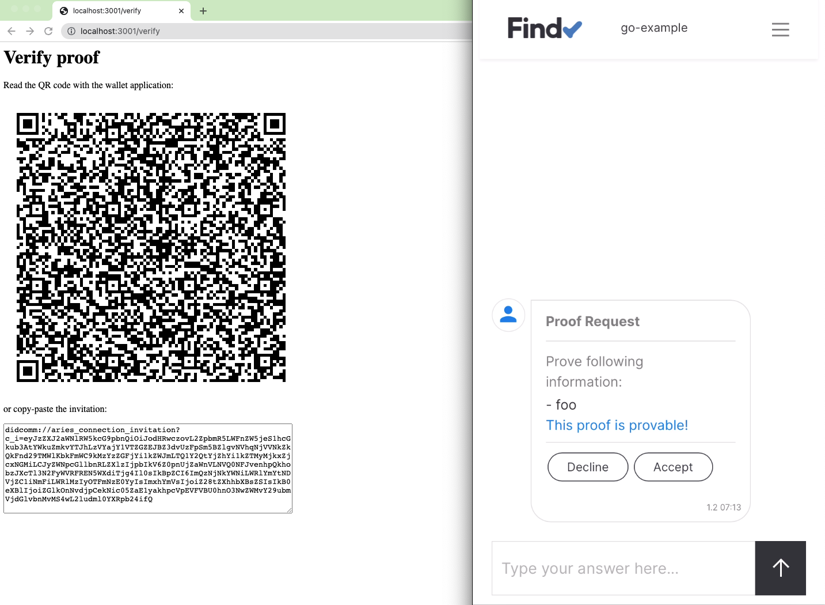

- How to Equip Your App with VC Superpowers

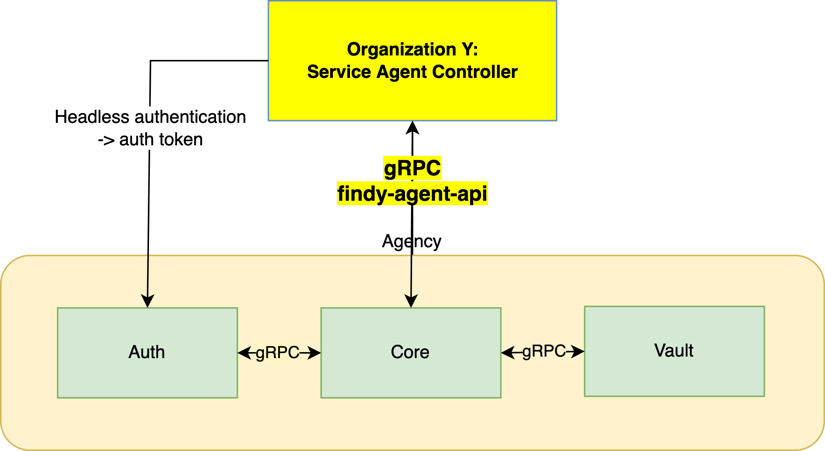

- Getting Started with SSI Service Agent Development

- Ledger Multiplexer

- The Hyperledger Global Forum Experience

- The Findy Agency API

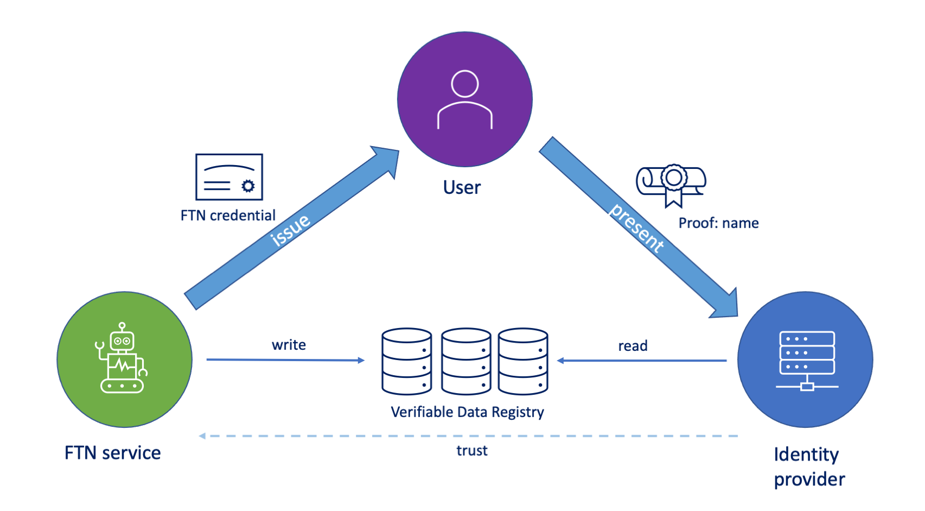

- Trust in Your Wallet

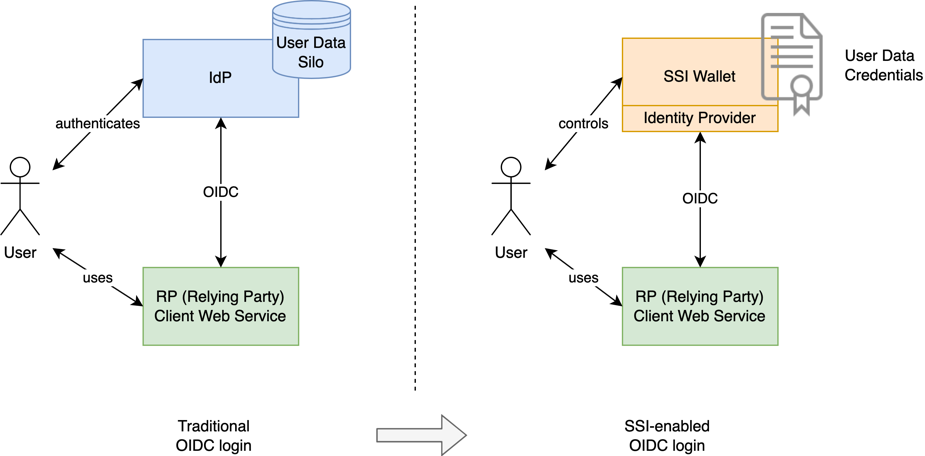

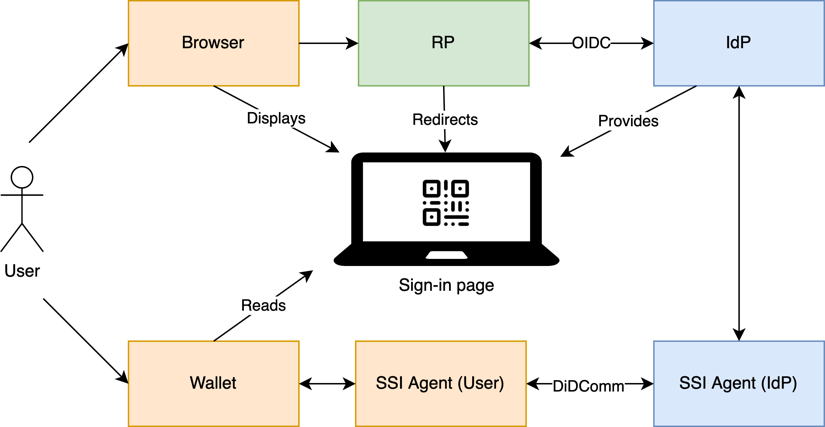

- SSI-Empowered Identity Provider

- Replacing Indy SDK

- The Missing Network Layer Model

- Fostering Interoperability

- Anchoring Chains of Trust

- The Arm Adventure on Docker

- Travelogue

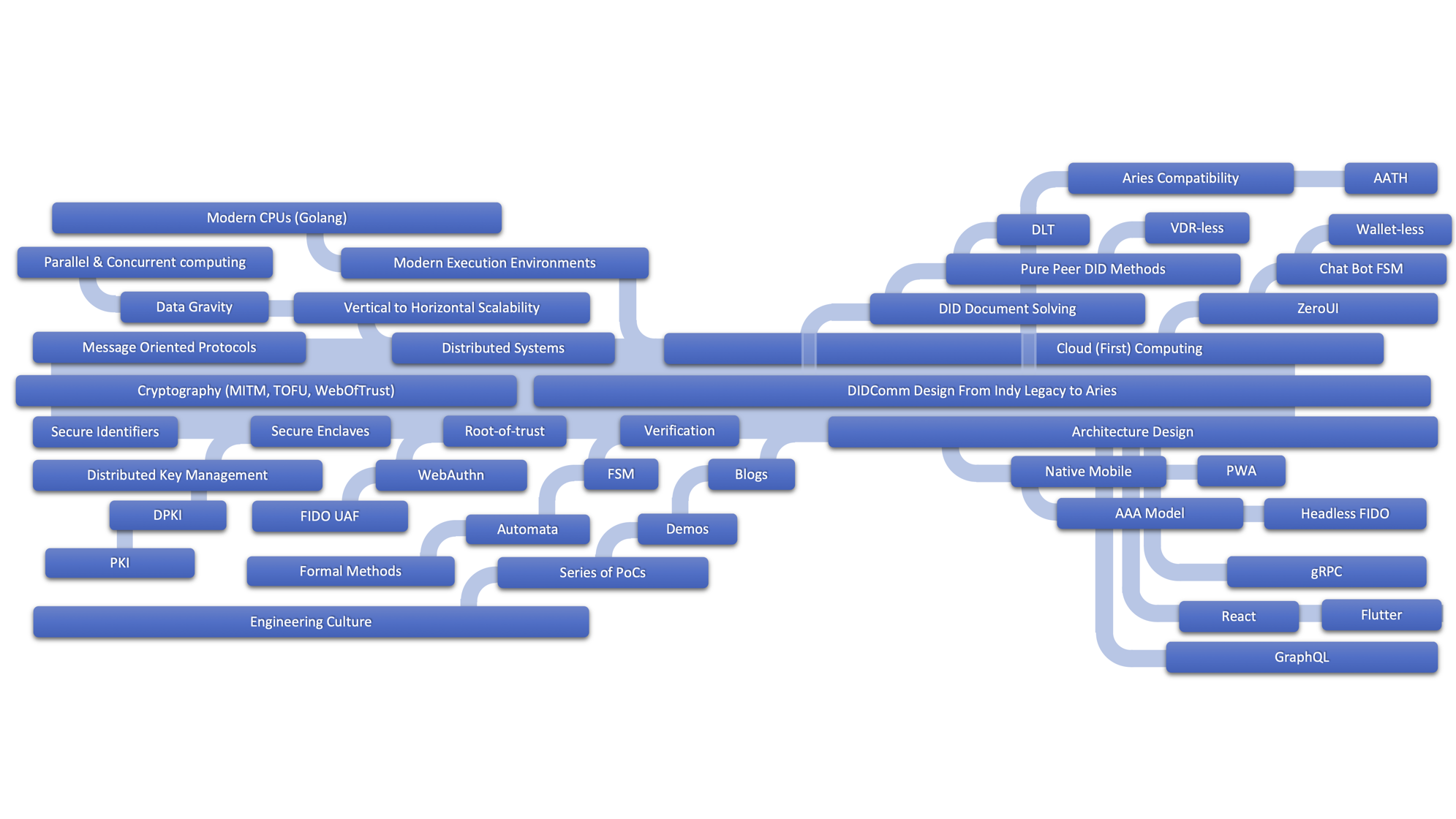

- Announcing Findy Agency

Autonomous LLMs Compromise Open Source Trust

This post is not a critique of LLM-assisted development such as code completion, refactoring aids, or interactive tooling. Those increase developer productivity while preserving understanding and responsibility.

The problem discussed here is autonomous LLM-based code contribution: the generation and submission of code with little or no human understanding, ownership, or accountability.

A Short Reminder: What Historically Drove OSS Contributions?

Historically, open source development was driven by a small but effective set of incentives:

- Developers scratched a personal itch or needed a feature themselves.

- Contributions demonstrated real competence and were valuable in a CV.

- Peer review worked because submitting poor patches carried reputational cost.

- The probability of being exposed as incompetent was non-trivial. (A culture that should drive every enterprise, shouldn’t it?)

Understanding the code was inseparable from contributing to it.

What Changed?

Autonomous LLM use breaks this coupling.

Today, it is possible to:

- Generate large multi-file patches without understanding the codebase.

- Submit frequent PRs that appear productive at first glance.

- Iterate via trial-and-error without learning.

- Walk away with no lasting cost if the PR is rejected or reverted.

The incentives remain (visible activity, CV value), but the cost of failure has

dropped close to zero.

No reputational damage. No accountability. Often no identity continuity.

That asymmetry did not exist before.

Contribution Paths

Pros and Cons (Mostly Cons—for Now)

Pros

Autonomous LLMs can be effective when applied to:

- Mechanical refactoring

- Boilerplate generation

- Test scaffolding

- Bug discovery and exploratory testing

Cons

- Code review becomes significantly harder: intent is unclear.

- Reasoning about correctness degrades when patches lack causal grounding.

- Many PRs fail basic scrutiny and require multiple review cycles.

- Simple fixes are obscured by large, AI-generated diffs.

If autonomous generation worked reliably, PRs would succeed on the first try. They usually don’t. That alone is a strong signal.

A practical lesson emerges:

Artificial cleverness should be applied to tedious, bounded tasks—not to areas

that require deep system understanding.

A Real-World Example

In one project I maintain, a contributor (identity not verified) reported a real bug with an excellent issue description.

What was excellent is that this contributor had delivered a high-quality bug report with the help of AI tools.

The proposed fix, however:

- Introduced ~300 lines of new code

- Increased complexity

- Missed the root cause

The correct fix was a single-line change, i.e., adding a missing function argument.

The contrast is telling: AI helped where it explored and analyzed, but failed where responsibility and understanding were required.

Maintainers Are Already Reacting

Some projects are explicitly responding to this shift:

- Cloud Hypervisor introduced a no–AI-generated-code policy, requiring contributors to attest that submissions are human-authored due to security and licensing concerns. WebProNews + The Register

- Gentoo Linux has banned AI-generated and AI-assisted code contributions, citing copyright, quality, and ethical concerns. The Register

- NetBSD explicitly forbids commits of code created with AI tools. Reddit

- QEMU adopted a policy rejecting contributions containing AI-generated code, in part due to Developer’s Certificate of Origin implications. Open Source Guy

- Across GitHub, maintainers have requested tooling support to block or identify Copilot-generated issues and pull requests to protect review bandwidth. Socket

This is not ideological resistance. It is an operational response.

The Core Issue: Trust (Old and New)

This is ultimately a trust problem.

Open source has always relied on an implicit model:

- Contributors understand what they submit.

- Reviewers can assume intent and competence.

- Bad behavior is eventually visible and costly.

Autonomous LLM-based contribution undermines this by making it cheap to submit code without understanding—and hard to detect when that happens.

Importantly, this trust problem existed even before AI, especially in security-critical projects. AI merely amplifies it.

A working trust model would therefore solve two problems at once: maintainer workload and contribution integrity.

What Could Help?

Tooling platforms could do more. For example:

- Flag patterns associated with autonomous generation in pull requests

- Encourage smaller, auditable changes

- Preserve contributor identity and behavioral continuity

GitHub already offers Copilot to open source maintainers.

Helping maintainers defend their review bandwidth would be an equally

valuable contribution.

What Happens Next?

If nothing changes:

- Maintainers burn out faster.

- Review latency increases.

- Projects become more closed and conservative.

The solution is not rejecting LLMs.

It is restoring responsibility.

Open source does not require perfect code.

But it does require that someone understands—and stands behind—what they submit.

And no amount of autonomy can replace that.

Rethinking SSI

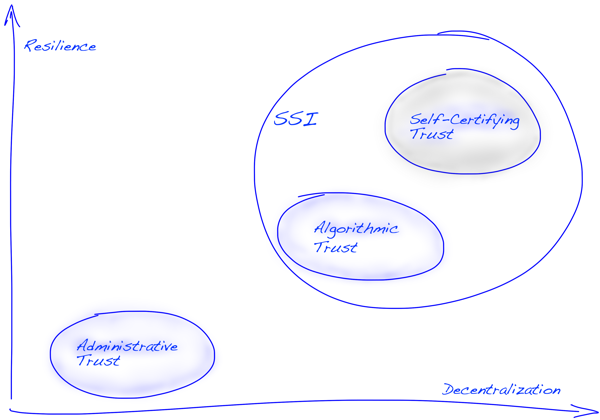

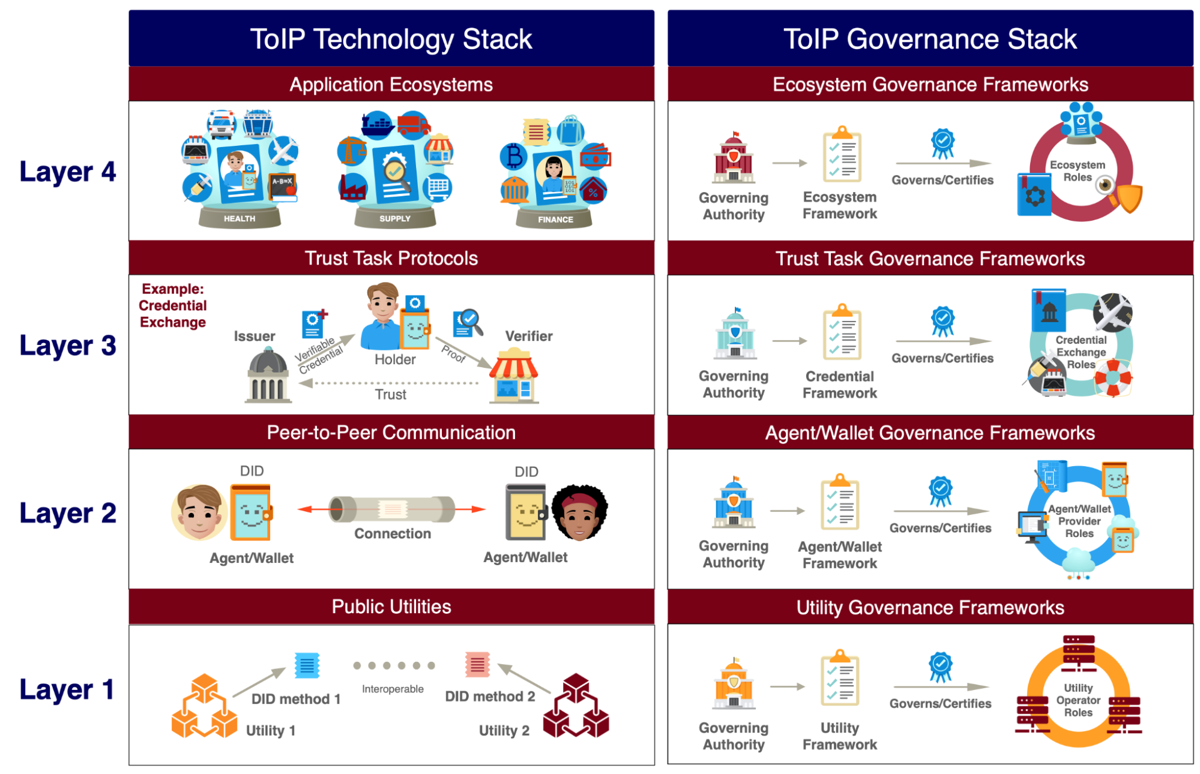

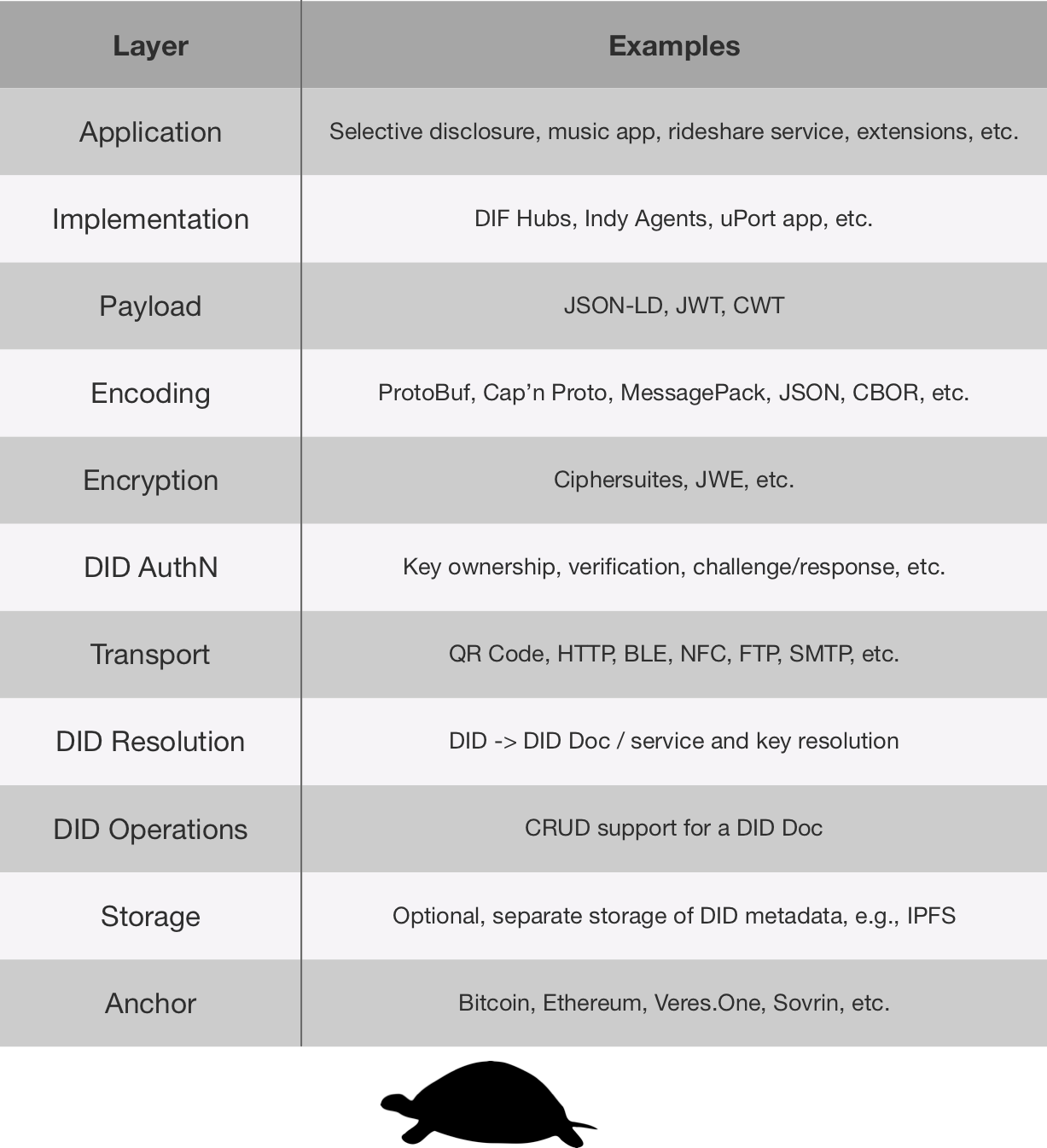

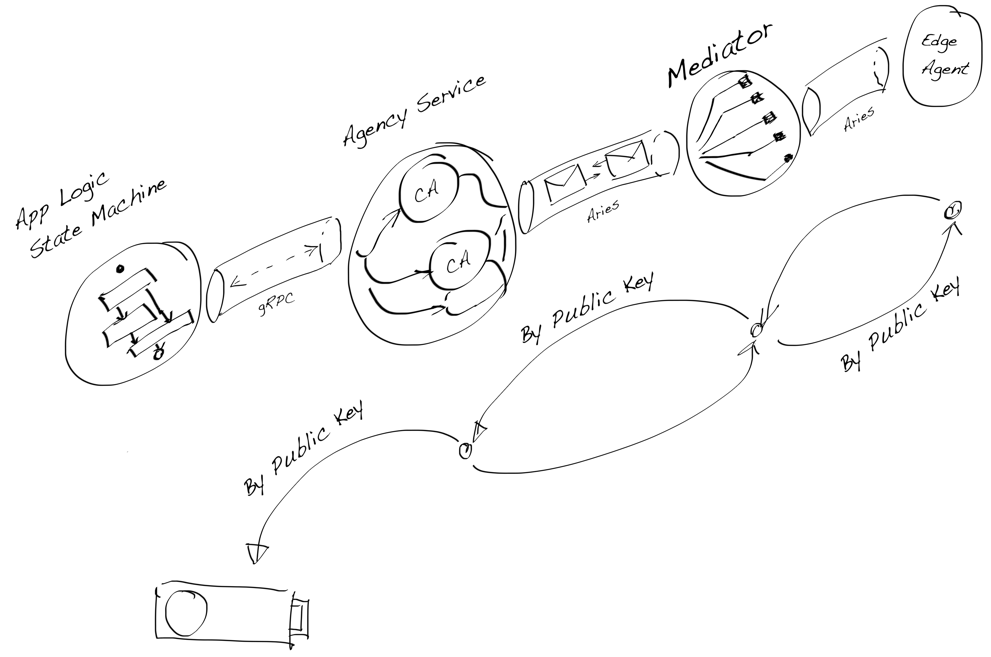

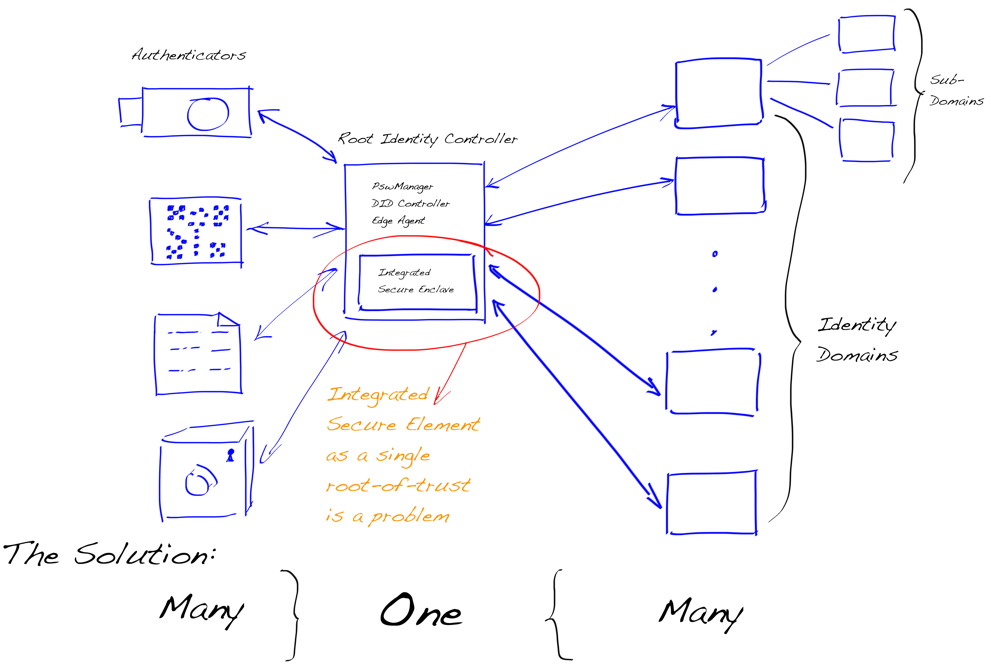

Since blockchain, decentralization has become a buzzword—and many of us still struggle to define what it really means to build fully decentralized systems. One key aspect often overlooked is trust. We cannot claim to have achieved decentralized infrastructure unless we also decentralize the trust model itself. That means going beyond central authorities and even beyond algorithmic “trustless” assumptions, toward Self-Certification as a foundational principle.

In the SSI space, three roots of trust have emerged:

- Administrative trust, as seen in traditional PKI (e.g., certificate authorities).

- Algorithmic trust, exemplified by blockchain-based systems—sometimes called “trustless,” though they still rely on pre-established consensus rules and protocols.

- Self-certifying trust, where entities define and prove trust relationships without requiring centralized or consensus-based validation.

Root of Trust Models — Foundations for Security & Sovereignty

This last model—self-certifying trust—is both the most ambitious and the most necessary if we are to realize the full potential of self-sovereign identity. From our experience, SSI will never become truly decentralized or user-controlled until we solve the challenge of enabling self-certifying roots of trust at scale.

This raises an important question: Should we approach identity-related use cases through an algorithmic zero-trust lens, or should we aim for a decentralized trust model based on self-certification?

Or should we simply build identity around PKI, as seen in current government-led approaches like the mobile Driver’s License (ISO mDL) or the EUDI Wallet? These systems rely on administrative trust models — and while they may not be decentralized, they offer well-understood security, strong governance, and user familiarity.

What seems increasingly clear is that these approaches are not mutually exclusive. To build inclusive, resilient, and user-friendly identity systems, we may need to combine the reliability of PKI, the resilience of algorithmic trust, and the sovereignty of self-certification. The future of identity likely lies in how well we can bridge and blend these trust models — not in picking one over the others.

Algorithmic Zero-Trust

Algorithmic zero-trust is a model where no actor is implicitly trusted, and all entities must continuously prove their authenticity and permissions through cryptographic or logical assertions. It’s common in enterprise security: access control decisions are made by policy engines based on signals like device health, IP reputation, or session risk.

In identity systems, zero-trust often implies constant re-verification, reliance on centralized attestations, and heavy use of encryption and secure channels. While secure and auditable, these systems are inherently closed and non-portable—they rely on predefined relationships and central policy evaluators, making them incompatible with open, permissionless ecosystems.

Zero-trust is effective when scope is limited, infrastructure is known, and risk can be algorithmically modeled. But it doesn’t support user-controlled identity, transitive trust, or cross-domain delegation—key goals in decentralized ecosystems.

Trust Based on Self-Certification

Self-certification flips the model. Instead of requiring a central verifier to approve every interaction, entities prove their claims through cryptographic self-assertions (e.g., signed DIDs, verifiable credentials, blinded signatures). Trust is not assumed—it’s earned or negotiated through transitive relationships and context-based reasoning.

In this model, identity becomes a fabric woven from peer-based attestations, localized trust decisions, and voluntary disclosure. Trust is not enforced by algorithms alone, but emerges from networks of autonomous actors—each defining their own trust domain.

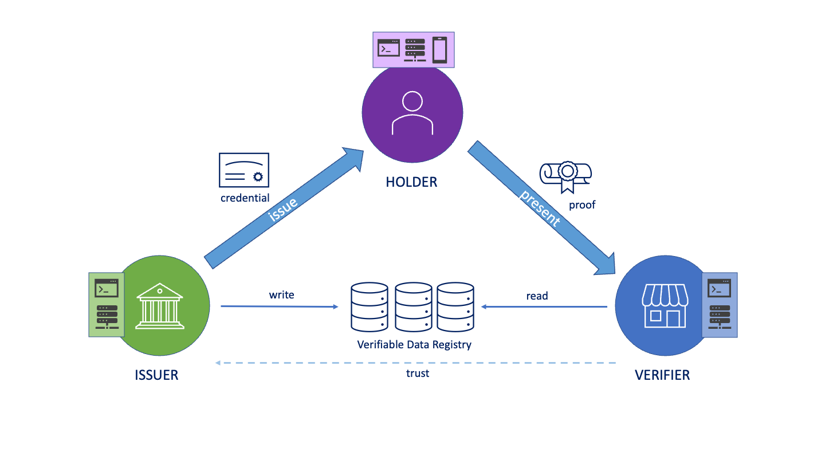

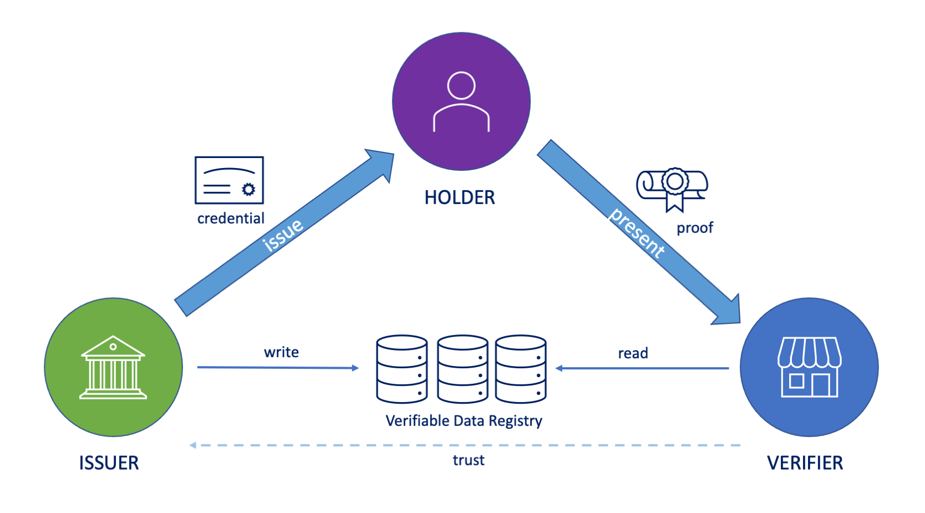

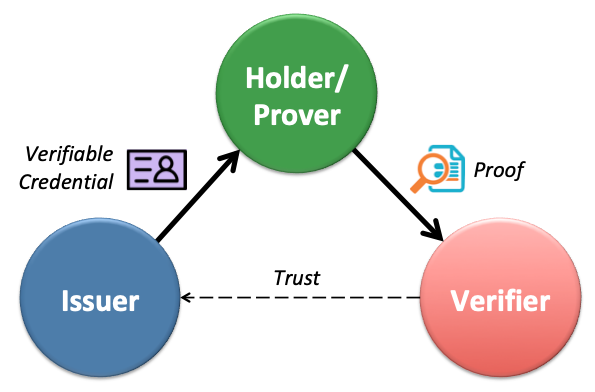

This is the essence of the decentralized trust model: issuers, holders, and verifiers operate without centralized approval or universal agreement. Instead, trust arises through social proofs, credential provenance, and endorsement chains. It enables privacy, sovereignty, and interoperability—but also requires new tooling to reason about trust, detect fraud, and handle revocation and rotation.

Key Learnings from Real-World SSI Projects with Hyperledger Indy

These aren’t just technical findings — they’re observations about what truly matters when designing decentralized identity systems.

Decentralized Trust Is Hard to Scale

While Indy supports decentralized identifiers and verifiable credentials, scaling trust without introducing central authorities remains a major challenge. Transitive trust models — the foundation of any decentralized Web of Trust — lack global context and require custom trust policies per verifier. Bridging isolated trust domains without creating new chokepoints is still an open issue.

Why Symmetric Communication Matters

💡 From Sessions to Relationships

Traditional web services treat identity as a temporary session.

SSI flips the model: identity becomes persistent, portable, and relational.Instead of logging in, users bring their agent.

Instead of onboarding, services recognize credentials.

No accounts. No passwords. Just trust — established cryptographically,

remembered across time and channels.

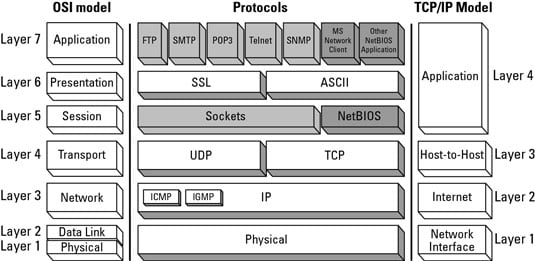

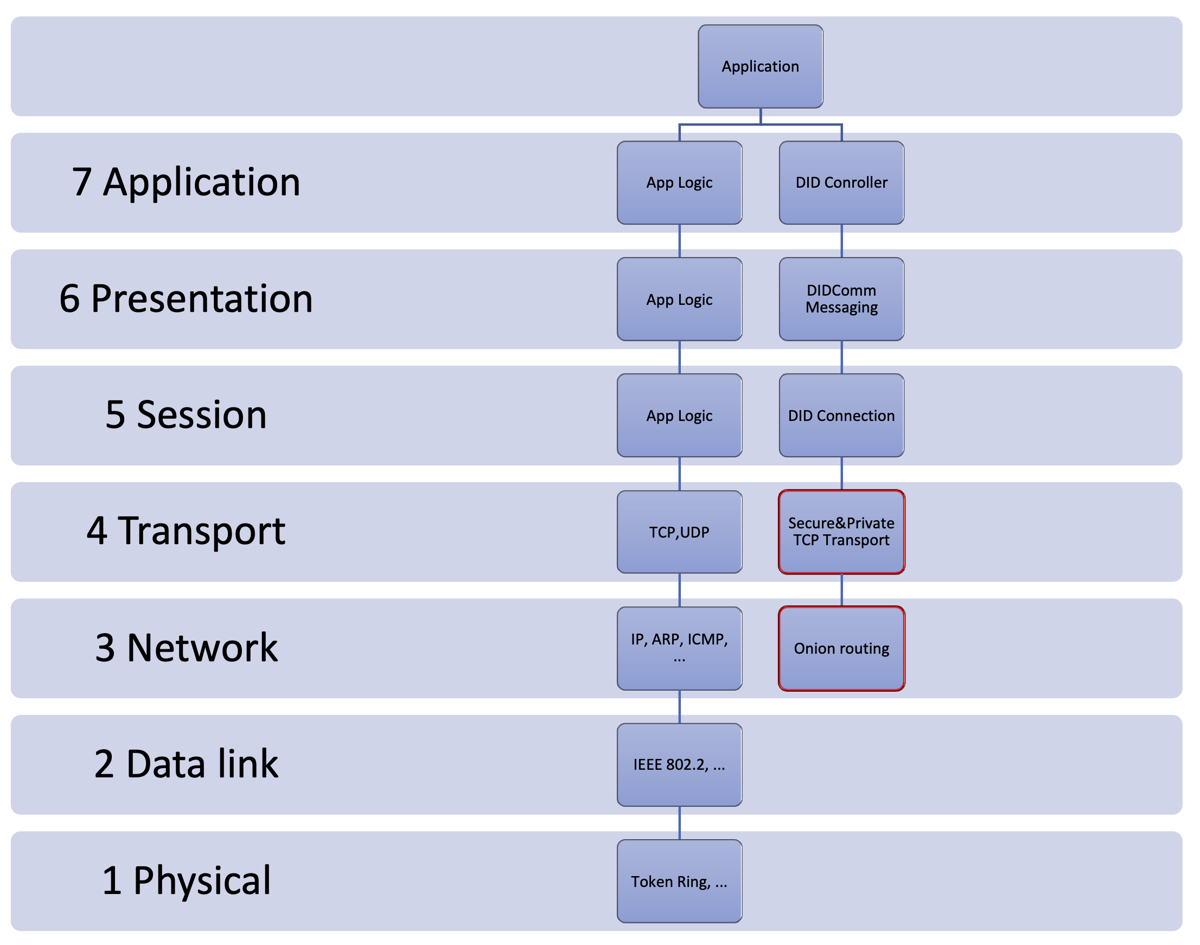

The Internet was built on the client/server model — efficient, scalable, and simple. But it also created a core asymmetry: servers are persistent and authoritative; clients are ephemeral and disposable. This model has made it nearly impossible for users to maintain continuity across interactions without depending on centralized platforms.

Each time a client connects, it must authenticate itself from scratch. Persistent identity lives server-side, and the user is just a temporary session. This has contributed directly to the rise of centralized identity silos and the dominance of platform-centric Web2 services.

What if clients — or more accurately, identity agents — had persistent state of their own? What if they could maintain ongoing relationships with services, carry trust context across channels, and even operate across devices or over time?

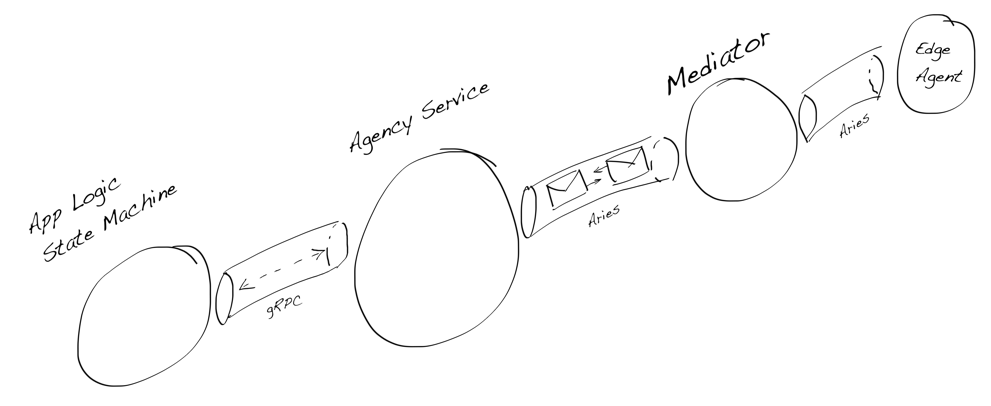

Symmetric, peer-to-peer communication models like DIDComm make this possible. Instead of logging in and starting over, agents can resume where they left off — with long-lived, secure relationships that don’t require sign-ups, onboarding flows, or federated logins. Trust becomes transitive, contextual, and user-controlled.

This model reimagines the client not as a throwaway session but as a sovereign, persistent identity. And it enables a future where servers don’t need to authenticate every visitor — they can simply recognize known agents, verify their credentials, and interact accordingly. No password, no registration — just relationship-based trust.

Privacy Requires Active Design

Surveillance resistance and correlation avoidance aren’t free. Features like DID rotation, pairwise identifiers, and signature blinding are essential to prevent unwanted linkage between interactions. Most deployments still struggle with implementing these practices consistently.

Real Adoption Happens on the Web

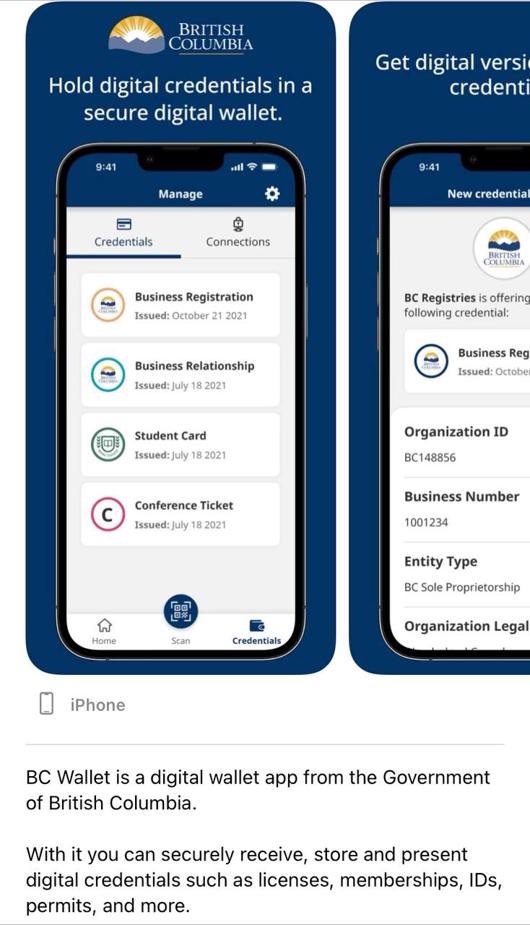

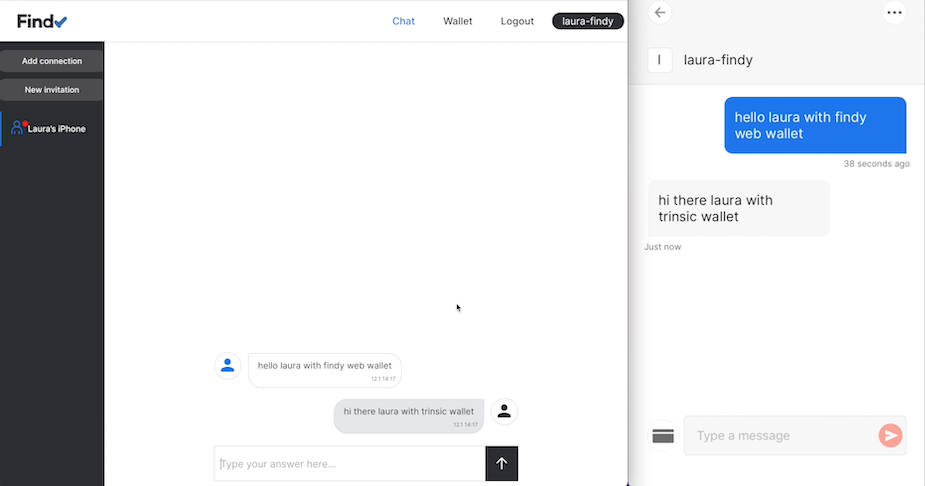

Despite the ideal of sovereign identity wallets, most user adoption we observed (>95%) occurred via web-based identity wallets or embedded browser experiences (e.g., Trinsic). Native mobile or hardware wallets still face barriers to usability and integration.

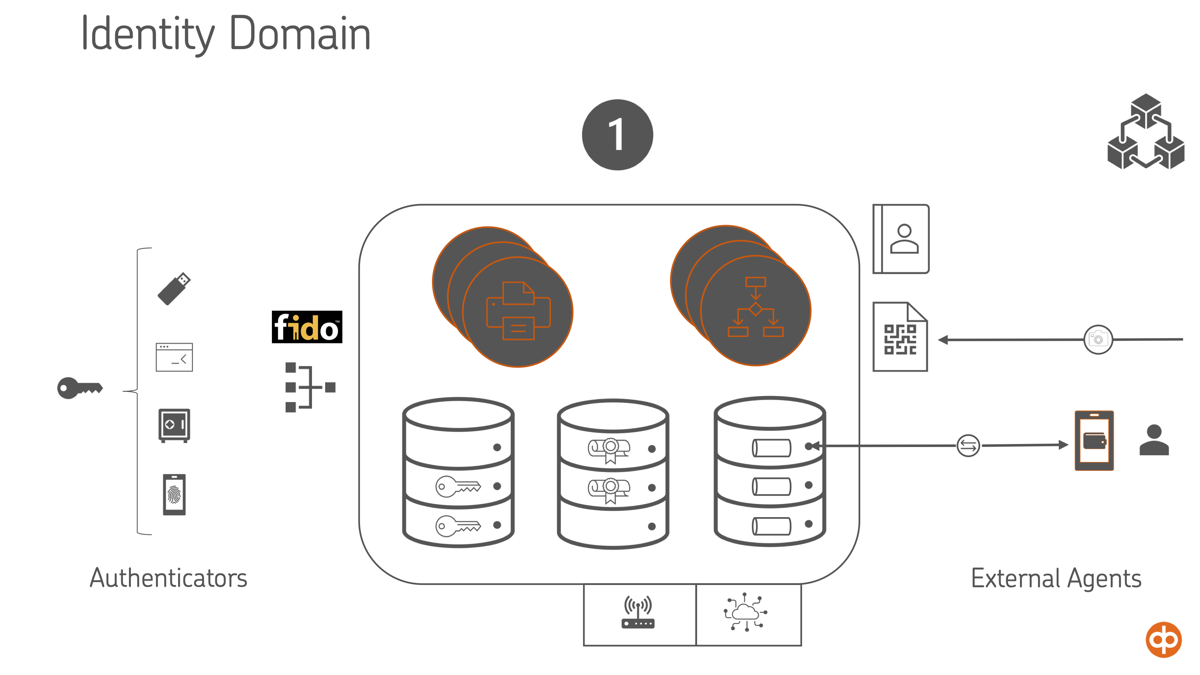

Identity Is More Than Credentials

In advanced use cases, identity extends beyond static credentials. We observed a growing need to bind biometric authenticators, behavioral data, credit scores, and reputational signals into a user’s Identity Domain. This domain becomes the source of trust for both human-facing and machine-mediated interactions.

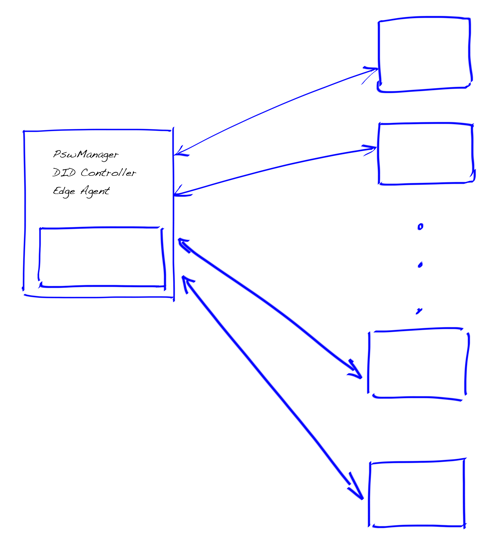

Identity Domain — Spanning Layer and Hub

IoT and Edge Cases Are Not Edge Cases

IoT use cases — such as identity for machines, devices, or wearables — are real and growing. These actors often lack screens, keys, or user interfaces, requiring lightweight agents and trust protocols that work in constrained environments.

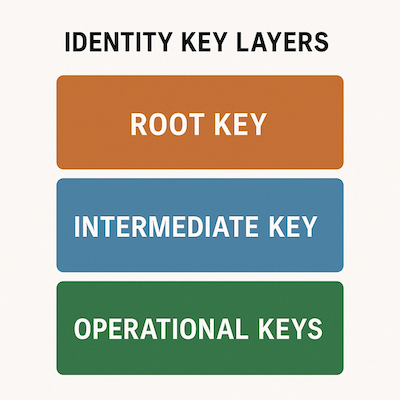

Revocation, Rotation, and Recovery Remain Fragile

Credential revocation, key rotation, and identity recovery are still brittle processes in most SSI systems. While the “SSI rule book” offers theoretical guidelines for these mechanisms, many are not grounded in operational realities or user behavior.

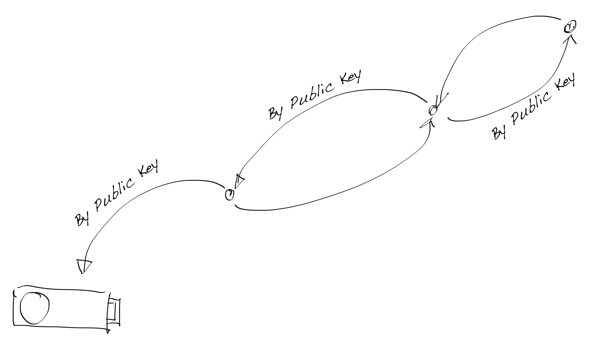

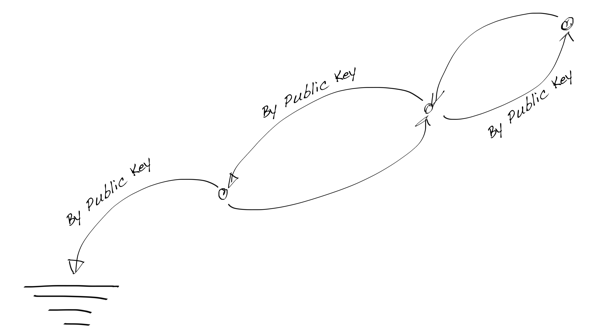

One key insight from our work is that treating key rotation as a holistic identity-level event is a mistake. Lessons from past decentralized systems—such as the PGP Web of Trust and Tor’s onion routing model—show that key hierarchies, delegation, and compartmentalized key usage are essential to managing trust and limiting key exposure over time.

Instead of relying on a single root key for an identity (and rotating it whenever anything changes), we should design systems where operational keys are short-lived, purpose-bound, and easily replaceable, with certification chaining providing continuity of identity without creating a single point of fragility.

Key Hierarchies — Key Certification Chaining

A self-sovereign identity system that cannot manage keys in a nuanced and layered way cannot scale. Identity needs to be resilient, renewable, and gracefully degradable—not brittle or tightly coupled to a single cryptographic anchor.

From Identity Agents to Autonomous Cyber Twins

As identities become programmable, identity agents are evolving into cyber agents — AI-driven entities that can manage trust relationships, negotiate disclosures, and even represent the user autonomously. These cyber twins could become persistent actors in decentralized ecosystems, handling everything from KYC to pseudonymous reputation management.

Looking Ahead

As the identity landscape continues to evolve, one thing is becoming increasingly clear: the need for a decentralized trust model isn’t just philosophical — it’s practical, and soon, existential.

Emerging technologies are rapidly moving toward autonomous, agent-based systems. From AI-powered identity agents to cross-domain agent-to-agent (A2A) protocols, the direction is set: machines will act on our behalf, negotiate access, and manage digital presence — sometimes entirely without human involvement.

But this vision only works if those agents can operate within a trust model that doesn’t require central control, universal agreement, or global consensus. That means trust must be self-certifying, transitive, and portable — grounded in cryptographic proofs and contextual relationships, not gatekeepers.

The biggest opportunity — and risk — lies here. If we solve the problem of self-certifying roots of trust, we unlock the next generation of agent-driven ecosystems: Cyber Twins that are privacy-preserving, pseudonymous, interoperable, and free to negotiate identity on behalf of their creators.

If we don’t, we’ll end up with a future where intelligent agents operate inside walled gardens, under opaque policies, bound by central authorities. That’s not self-sovereign identity — it’s algorithmic feudalism.

The box is opening. Let’s make sure what comes out is free.

The Swiss Army Knife for the Agency Project, Part 3: Other GitHub Tools

This post concludes the article series that overviews how our open-source project utilizes the GitHub platform for software development. In this last part, we will examine documentation, communication tools, and some utilities that help the development process.

GitHub Pages

Already in the early days, when we started to experiment with SSI technology, there arose a need to publish different experiment outcomes and findings to the rest of the community. However, we were missing a platform to collect these various reports and articles.

Finally, when we decided to open-source our agency code, we also established a documentation site and blog. This site has been valuable to us since then. It has worked as a low-threshold publication platform, where we have collected our various opinions, experimentation findings, and even technical guides. It has been a way of distributing information and an excellent source for discussion. It is easy to refer to articles that even date back some years when all of the posts are collected on the same site.

We use the Hugo framework for building the site, specifically the Docsy theme, which is especially suitable for software project documentation. The Hugo framework uses a low-code approach, where most of the content can be edited as Markdown files. However, when needed, modifications can also be made to the HTML pages quite easily.

The project site serves as a platform for documentation and blogs.

We publish the site via GitHub Pages,

a static website hosting solution that

GitHub offers for each repository. When the Pages feature is enabled, GitHub serves

the content of the pages from a dedicated branch.

A GitHub action handles the deployment,

i.e., builds the website and pushes the site files to this dedicated branch. The site URL

combines organization and repository names. An exception is the <organisation-name> .github.io-named

repositories that one can find in the similarly formatted URL, e.g., our

findy-network/findy-network.github.io

repository is served in URL https://findy-network.github.io.

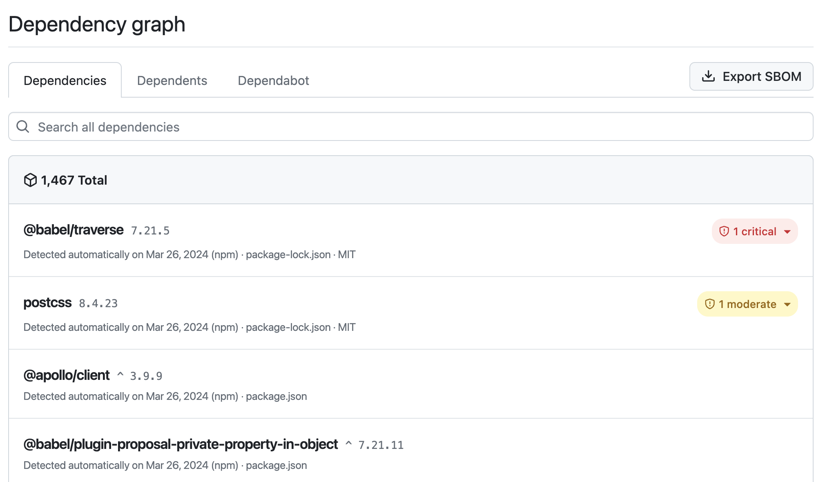

Dependabot

Dependabot is a GitHub-offered service that helps developers with the security and version updates of repository dependencies. When Dependabot finds a vulnerable dependency that can be replaced with a more secure version, it creates a pull request that implements this change. A similar approach exists with dependency version updates: When a new version is released, Dependabot creates a PR with the dependency update to the new version.

Dependabot analyzes the project dependencies based on the dependency graph. It creates PRs for security and version updates automatically.

The pull requests that the Dependabot creates are like any other PR. The CI runs tests on Dependabot PRs similar to those, as with the changes developers have made. One can review and merge the PR similarly.

Using Dependabot requires a comprehensive enough automated test set. The one who merges the PR needs to confirm that the change will not cause regression. Manual testing is required if the CI is missing the automated tests for the changes. If one needs manual testing, the benefit of using the bot for automated PR creation is lost, and it might even be more laborious to update the dependencies one by one.

In our project, we used the principle of testing all the crucial functionality with each change automatically. Therefore, it was safe for us to merge the Dependabot PRs without manual intervention. We even took one step further. We enabled automatic merging for those Dependabot PRs that passed the CI. In this approach, a theoretical risk exists that malicious code is injected from the supply chain through the version updates without us noticing it. However, we were willing to take the risk as we estimated that sparing a small team’s resources would be more important than shielding the experimental code from theoretical attacks.

You should estimate the risk/effort ratio when automating crucial tasks.

CI enables the automatic merging of Dependabot PRs on pull request creation:

name: "pr-target"

on:

pull_request_target:

# automerge successful dependabot PRs

dependabot:

# action permissions

permissions:

pull-requests: write

contents: write

# runner machine

runs-on: ubuntu-latest

# check that the PR is from dependabot

if: ${{ github.event.pull_request.user.login == 'dependabot[bot]' }}

steps:

- name: Dependabot metadata

id: dependabot-metadata

uses: dependabot/fetch-metadata@v2

- name: Enable auto-merge for Dependabot PRs

# check that target branch is dev

if: ${{steps.dependabot-metadata.outputs.target-branch == 'dev'}}

# enable PR auto-merge with GitHub CLI

# PR will be automatically merged if all checks pass

run: gh pr merge --auto --merge "$PR_URL"

env:

PR_URL: ${{github.event.pull_request.html_url}}

GH_TOKEN: ${{secrets.GITHUB_TOKEN}}

Notifications And Labeling

There are chat application integrations (for example Teams, Slack) one can use to send automatic notifications from GitHub. We have used this feature to get notified when PRs are created and merged to keep the whole team up-to-date on what changes are introduced. In addition, we always get a notification when someone raises an issue in our repositories. It has allowed us to react quickly to community questions and needs.

There have also been some problems regarding the notifications. The Dependabot integration creates a lot of pull requests for several repositories, and sometimes, the information flood is too much. Therefore, we have custom labeling functionality that helps us automatically label PRs based on the files the changes affect. The automatic labeling is implemented with GitHub Action, which is triggered on PR creation. We can filter only the PR notifications needed for our chat integration using these labels.

Automatic labeling can be tremendously helpful, especially with large repositories with many subprojects. Labels can also be used as a filter basis for GitHub Actions. For instance, one can configure a specific test for changes that affect only a particular folder. This approach can speed up the CI runtimes and save resources.

Codespaces And CLI Tool

GitHub Codespaces was a less-used feature for our project. Still, we found it helpful, especially when we organized workshops to help developers get started with agency application development.

Codespaces offers a cloud-based development environment that works as VS Code dev containers. A repository can have a configuration for the environment, and the codespace is created and launched based on that configuration. Once the codespace is up and running, the developers have a ready development environment with all the needed bells and whistles. It removes a lot of the setup hassle we usually encounter when using different operating systems and tools for development.

Example of a codespace configuration for our workshop participants. All the needed tools are preinstalled to the codespace that one may need when doing the workshop exercises:

{

"name": "Findy Agency workshop environment",

"image": "ghcr.io/findy-network/agency-workshop-codespace:0.1.0",

"workspaceFolder": "/home/vscode/workshop",

"features": {

"ghcr.io/devcontainers-contrib/features/direnv-asdf:2": {}

},

"customizations": {

"vscode": {

"extensions": [

"golang.go",

"mathiasfrohlich.Kotlin"

]

}

},

"postCreateCommand": "asdf direnv setup --shell bash --version latest"

}

The GitHub CLI tool was another helpful tool we found during the agency project. It offers the GitHub web application functionality via the command line. The tool is especially useful for those who suffer from context switching from terminal to browser and vice versa or who prefer to work from a terminal altogether.

In addition, the CLI tool can automate certain routines, both in CI and local environments. We use the Makefile to record often-used commands to support developers’ memory. The CLI is also a great fit for playing out with the GitHub API.

Example of listing PRs with the GitHub CLI tool.

Thanks for Reading

This article completes our three-part saga of using GitHub in our agency project. I hope you have learned something from what you have read so far. I am always happy to discuss our approach and hear if you have ideas and experiences on how your team handles things!

The Swiss Army Knife for the Agency Project, Part 2: Release Management with GitHub

This article will examine how we handle releasing and artifact delivery with GitHub tools in our open-source project. When designing our project’s release process, we have kept it lightweight but efficient enough. One important goal has been ensuring that developers get a stable version, i.e., a working version of the code, whenever they clone the repository. Furthermore, we want to offer a ready-built deliverable whenever possible so that trying out our software stack is easy.

Branching Model

In our development model, we have three different kinds of branches:

- Feature branches, a.k.a. pull request branch: each change is first pushed to a feature branch,

tested, and possibly reviewed before merging it to the

devbranch. dev: the baseline for all changes. One merges pull requests first with this branch. If tests fordevsucceed, one can merge the changes into themasterbranch. Thedevbranch test set can be more extensive than the one run for the pull request (feature) branch.master: the project’s main branch that contains the latest working version of the software. Changes to this branch trigger automatic deployments to our cloud environment.

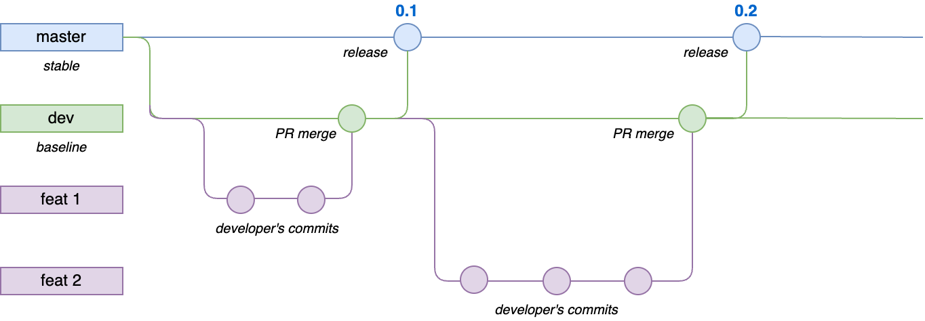

Branching model has three branches: feature branches, dev, and master.

The primary reason for using this branching style is to enable us to run extensive automated testing

for changes introduced by one or multiple pull requests before merging them with the master branch.

Therefore, this routine ensures that the master branch always has a working version.

This popular branching model has other perks as well. You can read more about them

here, for example.

Time for a Release

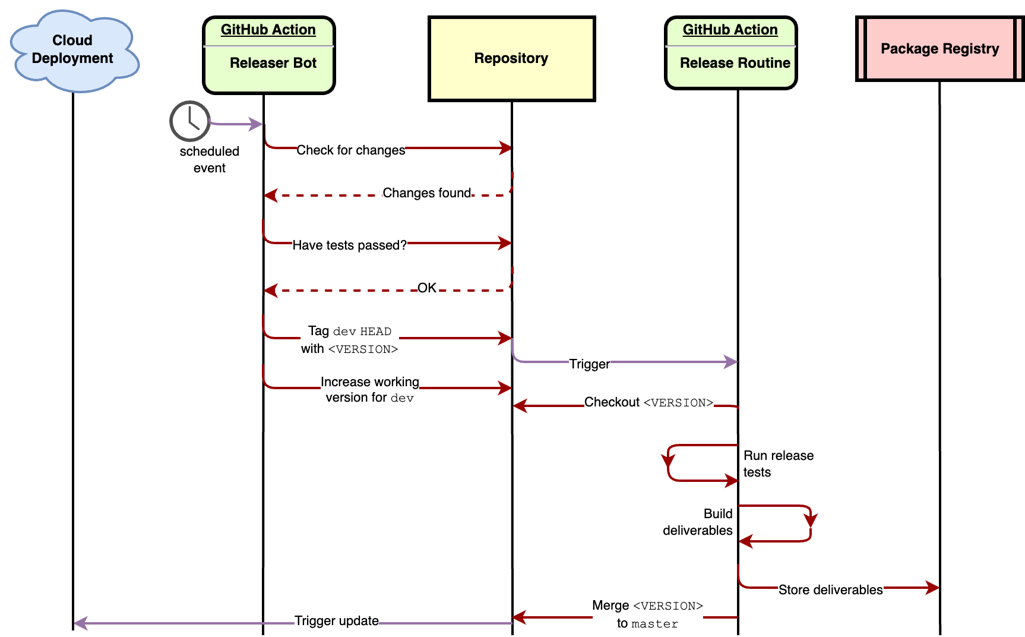

Our release bot is a custom GitHub action.

Each repository that follows the branching model described

above uses the bot for tagging the release. The bot’s primary duty is to check if the dev branch

has code changes missing from the master branch. If the bot finds changes, it will create a version

tag for the new release and update the working version of the dev branch.

The bot works night shifts and utilizes a GitHub scheduled event as an alarm clock. For each repository it is configured for, it runs the same steps:

- Check if there are changes between the

devandmasterbranches - Check if the required workflows (tests) have succeeded for the

devbranch - Parse the current working version from the

VERSIONfile - Check out the

devbranch and tag thedevbranchHEADwith the current version - Push tag to remote

- Increase the working version and commit it to the

dev-branch.

When step 5 is ready, i.e., the bot has created a new tag, another workflow will start.

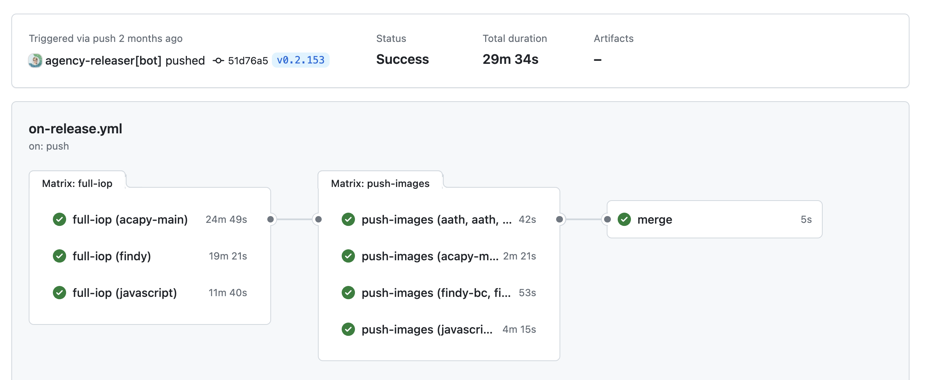

This workflow will handle building the project deliverables for the tagged release.

After a successful release routine, the CI merges the tag to master.

Changes are updated nightly from dev to master.

Package Distribution

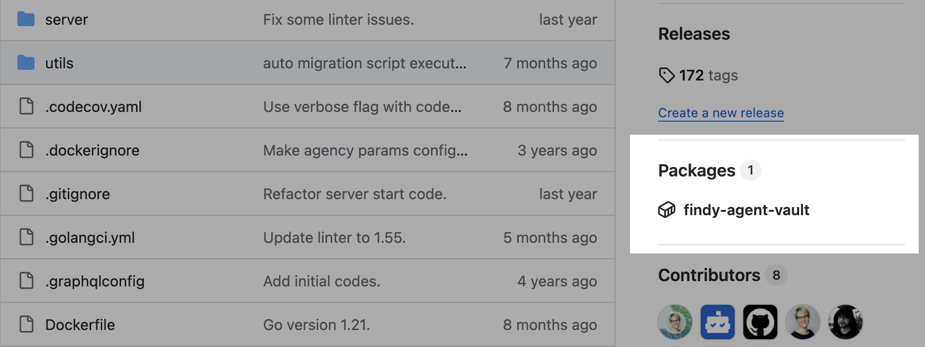

Each time a release is tagged, the CI builds the release deliverables for distribution. As our stack contains various-style projects built in various languages, the release artifacts depend on the project type and programming language used.

One can navigate to linked packages from the repository front page.

The CI stores all of the artifacts in GitHub in one way or another. Docker images and library binaries utilize different features of GitHub Packages, a GitHub offering for delivering packages to the open-source community. The Packages UI is integrated directly with the repository UI, so finding and using the packages is intuitive for the user.

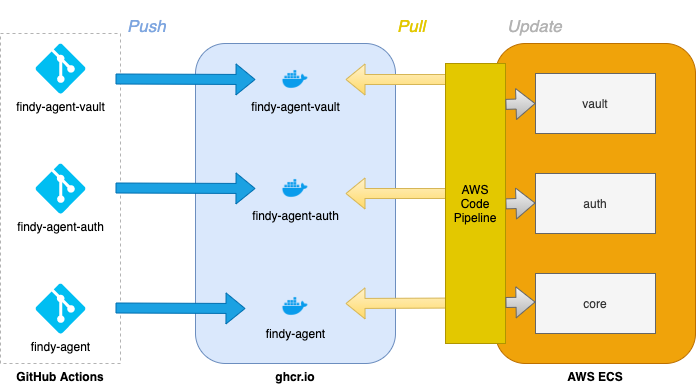

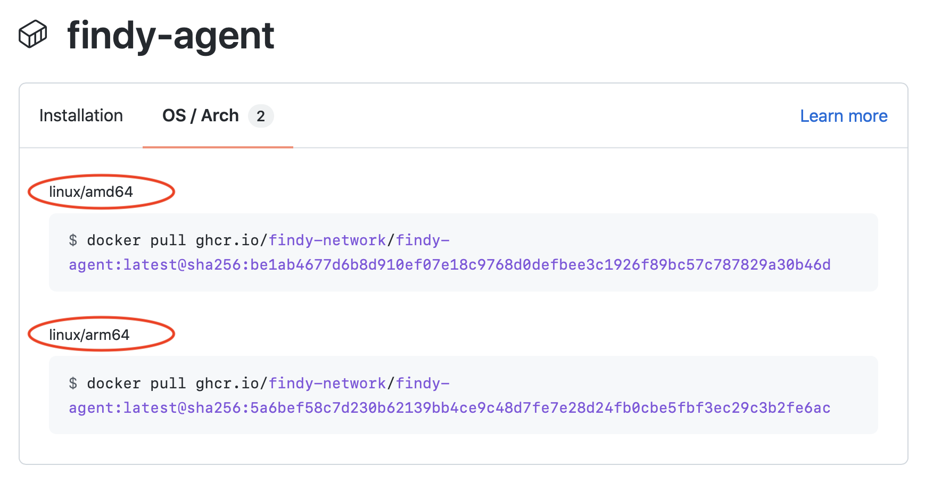

Docker Containers for Backend Services

Our agency software has a microservice architecture, meaning multiple servers handle the backend functionality. To simplify cloud deployment and service orchestration in a local development environment, we build Docker images for each service release and store them in the GitHub container registry.

The GitHub Actions workflow handles the image building. We build two variants of the images. In addition to amd64, we make an arm64 version to support mainly environments running on Apple-silicon-based Macs. You can read more about utilizing GitHub Actions to create images for multiple platforms here.

The community can access the publicly stored images without authentication.

The package namespace is https://ghcr.io, meaning one can refer to an image with the path

ghcr.io/NAMESPACE/IMAGE_NAME:TAG, e.g., ghcr.io/findy-network/findy-agent:latest.

Publishing and using images from the Docker registry is straightforward.

Libraries

We also have utility libraries that provide the common functionalities needed to build clients for our backend services. These include helpers for Go, Node.js, and Kotlin.

In the Go ecosystem, sharing modules is easy. The Go build system must find the dependency code in a publicly accessible Git repository. Thus, the build compiles the code on the fly; one does not need to distribute or download binaries. Module versions are resolved directly from the git tags found in the repository.

The story is different for Node.js and Kotlin/Java libraries. GitHub offers registries for npm, Maven, and Gradle, and one can easily integrate the library’s publishing to these registries into the project release process. However, accessing the libraries is more complicated. Even if the package is public, the user must authenticate to GitHub to download the package. This requirement adds more complexity for the library user than mainstream options, such as the default npm registry. I would avoid distributing public libraries via this feature.

Sample for publishing Node.js package to GitHub npm registry via GitHub action:

name: release

on:

push:

tags:

- '*'

jobs:

publish-github:

# runner machine

runs-on: ubuntu-latest

# API permissions for the job

permissions:

contents: read

packages: write

steps:

# checkout the repository code

- uses: actions/checkout@v4

# setup node environment

- uses: actions/setup-node@v4

with:

node-version: '18.x'

registry-url: 'https://npm.pkg.github.com'

scope: 'findy-network'

# install dependencies, build distributables and publish

- run: npm ci

- run: npm run build

- run: npm publish

env:

NODE_AUTH_TOKEN: ${{ secrets.GITHUB_TOKEN }}

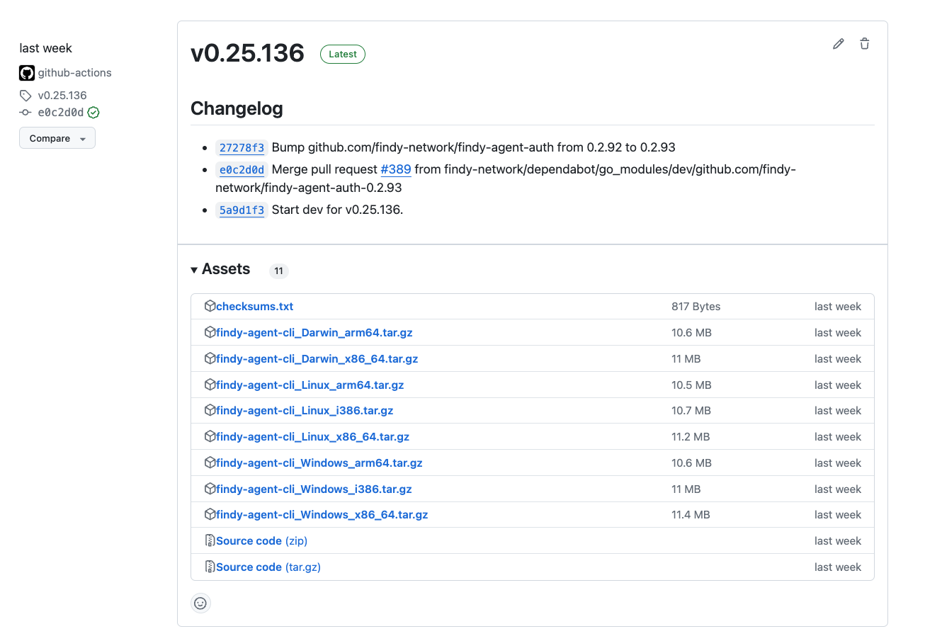

Executables And Other Artifacts

For other artifacts, we utilize

the GitHub releases feature.

For instance, we use the goreleaser

helper tool for our CLI,

which handles the cross-compilation of the executable for several platforms.

It then attaches the binary files to the release,

from where each user can download them.

We even have an automatically generated installation script that downloads the correct

version for the platform in question.

CLI release has binaries for multiple platforms.

One can also attach other files to releases. We define our backend gRPC API with an IDL file. Whenever the API changes, we release a new version of the IDL using a GitHub release. We can then automate other logic (gRPC utility helpers) to download the IDL for a specific release and easily keep track of the changes.

Summary

This post summarized how our open-source project uses different GitHub features for versioning, release management, and artifact distribution. In our last article, we will review various other GitHub tools we have utilized.

The Swiss Army Knife for the Agency Project, Part 1: GitHub Actions

Our research project has been utilizing GitHub tools for several years. Most of the features are free for publicly hosted open-source projects. We have used these tools with an experimental spirit, gathering experience from the different features. Although our project does not aim directly at production, we take a production-like approach to many things we do.

In this article series, I summarize our team’s different experiments regarding GitHub features. As GitHub’s functionality is easily extensible through its API and abilities for building custom GitHub actions, I also explain how we have built custom solutions for some needs.

The series consists of three articles:

- This first part overviews GitHub Actions as a continuous integration platform.

- The second part concentrates on release management and software distribution.

- Finally, in the last post, we introduce other useful integrations and features we used during our journey.

CI Vets the Pull Requests

GitHub Actions is a continuous integration and

continuous delivery (CI/CD) platform

for building automatic workflows. One defines the workflows as YAML configurations,

which get stored along with repository code in a dedicated subfolder (.github/workflows).

The GitHub-hosted platform automatically picks up and executes these workflows

when defined trigger events happen.

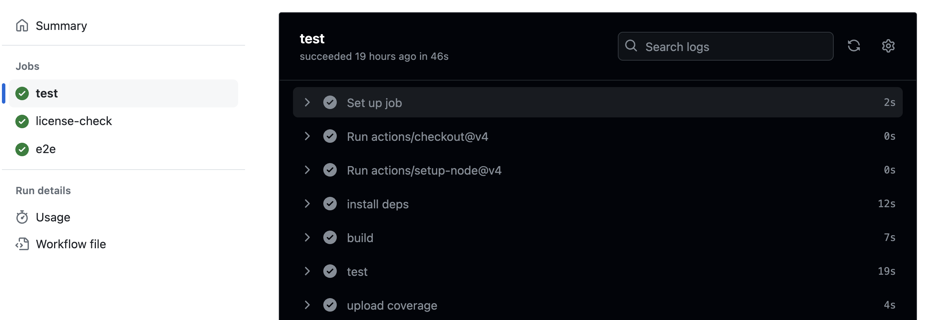

A workflow can contain multiple jobs. Each workflow job consists of different steps. The logs from the steps can be browsed in the Actions UI.

Snippet below shows how the “test” job is configured:

name: test

on:

# run whenever there is a push to any branch

push:

jobs:

test:

# runner machine

runs-on: ubuntu-latest

steps:

# check out the repository code

- uses: actions/checkout@v4

# setup node environment

- uses: actions/setup-node@v4

with:

node-version: '18.x'

# install dependencies, build, test

- name: install deps

run: npm ci

- name: build

run: npm run build

- name: test

run: npm test

# upload test coverage to external service

- name: upload coverage

uses: codecov/codecov-action@v4

with:

files: ./coverage/coverage-final.json

fail_ci_if_error: true

token: ${{ secrets.CODECOV_TOKEN }}

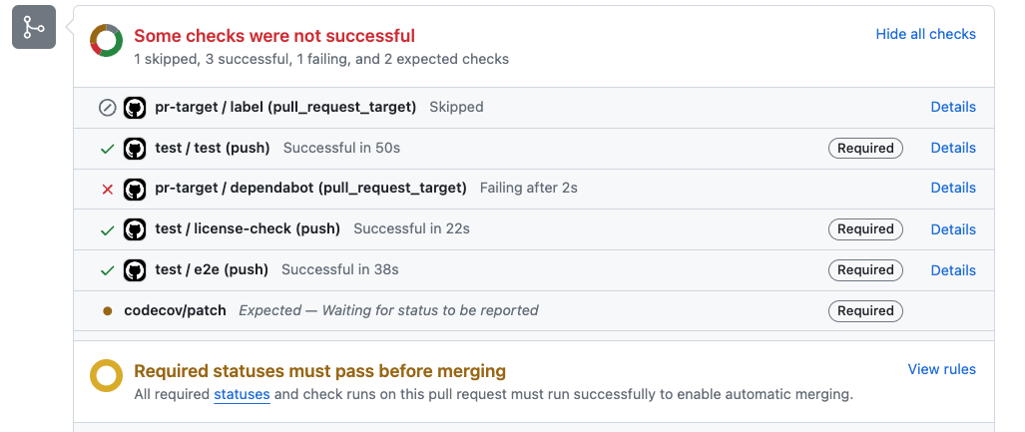

In our project development model, developers always introduce changes through a pull request. CI runs a collection of required checks for the pull request branch, and only when the checks succeed can the changes be integrated into the main development branch.

CI checks are visible in the pull request view.

Our code repositories typically have a dedicated workflow for running linting, unit testing, license scanning, and e2e tests. CI runs these workflow jobs whenever the developer pushes changes to the PR branch. The CI jobs are visible in the PR view, and merging is impossible if some required checks fail.

Actions Simplify the Workflows

The basic structure of a GitHub Actions job definition contains:

- Setting up the base environment for the workflow.

- Checking out the repository code.

- Execution steps that one can define as command-line scripts or actions.

Actions are reusable components written to simplify workflows. GitHub offers actions for the most commonly needed functionalities. You can also create custom actions specific to your needs or search for the required functionality in the actions marketplace, where people can share the actions they have made.

Example of using a custom action in a workflow:

name: test

on: push

jobs:

test:

runs-on: ubuntu-latest

steps:

- name: setup go, lint and scan

uses: findy-network/setup-go-action@master

with:

linter-config-path: .golangci.yml

For instance, we have written a custom action for our Go code repositories. These repositories need similar routines to set up the Go environment, lint the code, and check its dependencies for incompatible software licenses. We have combined this functionality into one custom action we use in each repository. This way, the workflow definition is kept more compact, and implementing changes to these routines is more straightforward as we need to edit only the shared action instead of all the workflows.

Custom action configuration example:

runs:

using: "composite"

steps:

# setup go environment

- uses: actions/setup-go@v5

if: ${{ inputs.go-version == 'mod' }}

with:

go-version-file: './go.mod'

- uses: actions/setup-go@v5

if: ${{ inputs.go-version != 'mod' }}

with:

go-version: ${{ inputs.go-version }}

# run linter

- name: golangci-lint

if: ${{ inputs.skip-lint == 'false' }}

uses: golangci/golangci-lint-action@v4

with:

version: ${{ inputs.linter-version }}

args: --config=${{ inputs.linter-config-path }} --timeout=5m

# run license scan

- name: scan licenses

shell: bash

if: ${{ inputs.skip-scan == 'false' }}

run: ${{ github.action_path }}/scanner/scan.sh

In this shared action, setup-go-action,

we can run our custom scripts or use existing actions.

For example, we set up the Go environment with actions/setup-go,

which is part of

the GitHub offering. Then, we lint the code using

the golangci/golangci-lint action,

which is made available by the golangci-team. Lastly, the action utilizes our script to scan

the licenses. We can efficiently combine and utilize functionality crafted by others and ourselves.

Runners Orchestrating E2E-tests

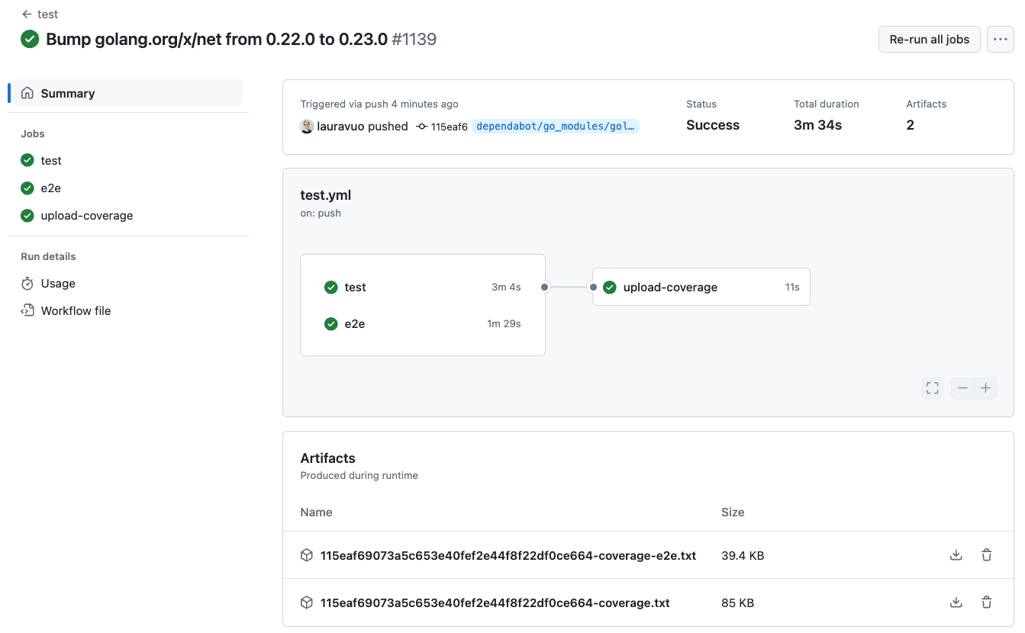

GitHub Actions workflow view run shows the jobs and their hierarchy. The artifacts and logs are available for each job also in this view.

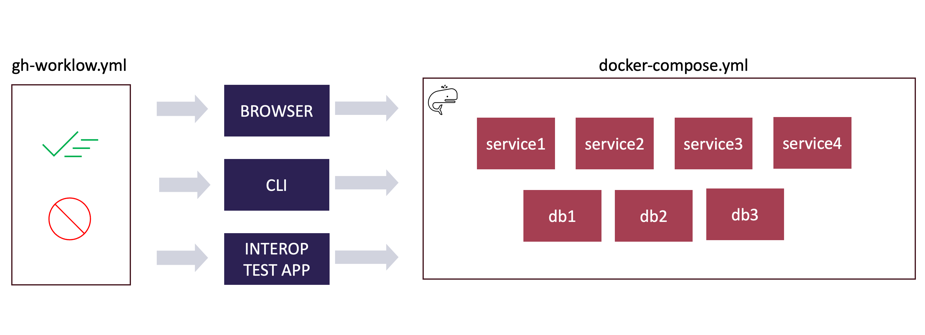

In addition to linting, scanning, and unit tests, we typically run at least one e2e-styled test in parallel. In e2e tests, we simulate the actual end-user environment where the software will be run and execute tests with bash scripts or test frameworks that allow us to run tests through the browser.

Workflows can contain multiple jobs that run in parallel or sequentially. In this example, we have utilized the matrix strategy to run the same job with different parameters.

In these e2e tests, we typically set up the test environment using Docker containers, and the GitHub-hosted runners have worked well for orchestrating the containers. We can define needed container services in the workflow YAML configuration or use, e.g., Docker’s compose tool directly to launch the required containers.

Example of defining a needed database container in a GitHub Actions workflow:

services:

postgres:

image: postgres:13.13-alpine

ports:

- 5433:5432

env:

POSTGRES_PASSWORD: password

POSTGRES_DB: vault

External Integrations

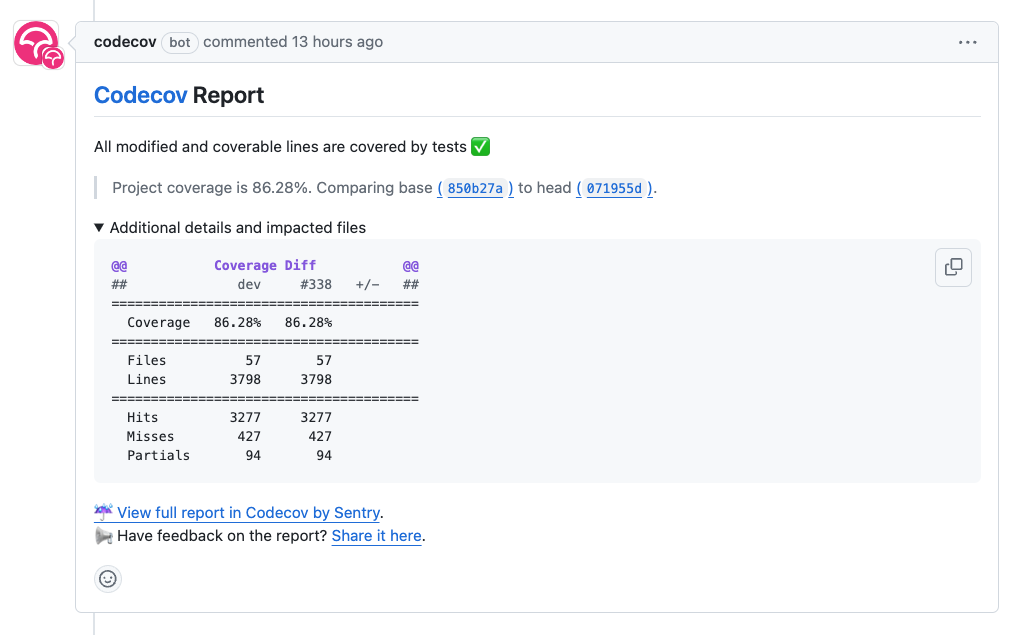

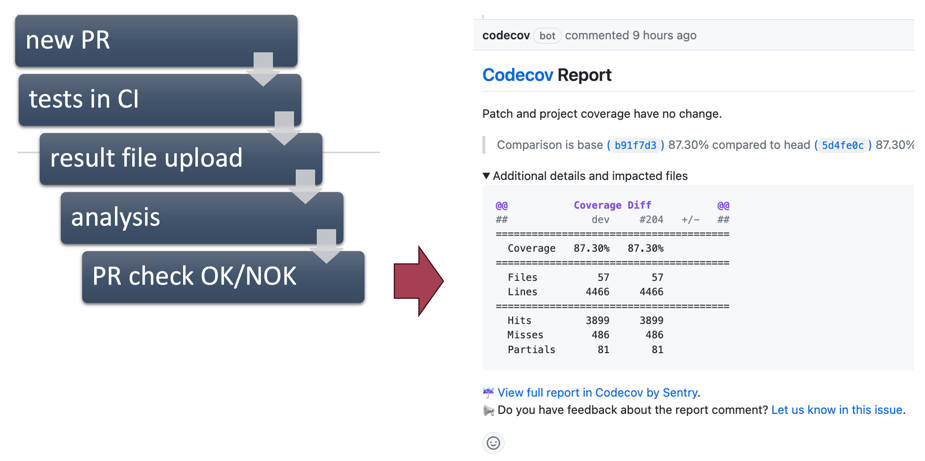

Once the CI has run the tests, we use an external service to analyze the test coverage data. Codecov.io service stores the coverage data for the test runs. It can then use the stored baseline data to compare the test run results of the specific pull request. Once the comparison is ready, it gives feedback on the changes’ effect on the coverage. The report gets visualized in the pull request as a comment and line-by-line coverage in the affected code lines.

Codecov.io inserts the coverage report as a comment to the pull request view through the GitHub API.

Codecov is an excellent example of integrating external services into GitHub Actions workflows. Each integration needs a GitHub application that gives the third-party tool access to your organization’s resources. The organization must review the required permissions and install the application before GitHub allows the integration to work. One can also build GitHub applications for custom purposes. Read more about how a GitHub application can be helpful when automating release versioning.

Next Up: Release Management

This article gave an overview of how Findy Agency uses GitHub Actions as a continuous integration platform. The next one will be about release management. Stay tuned.

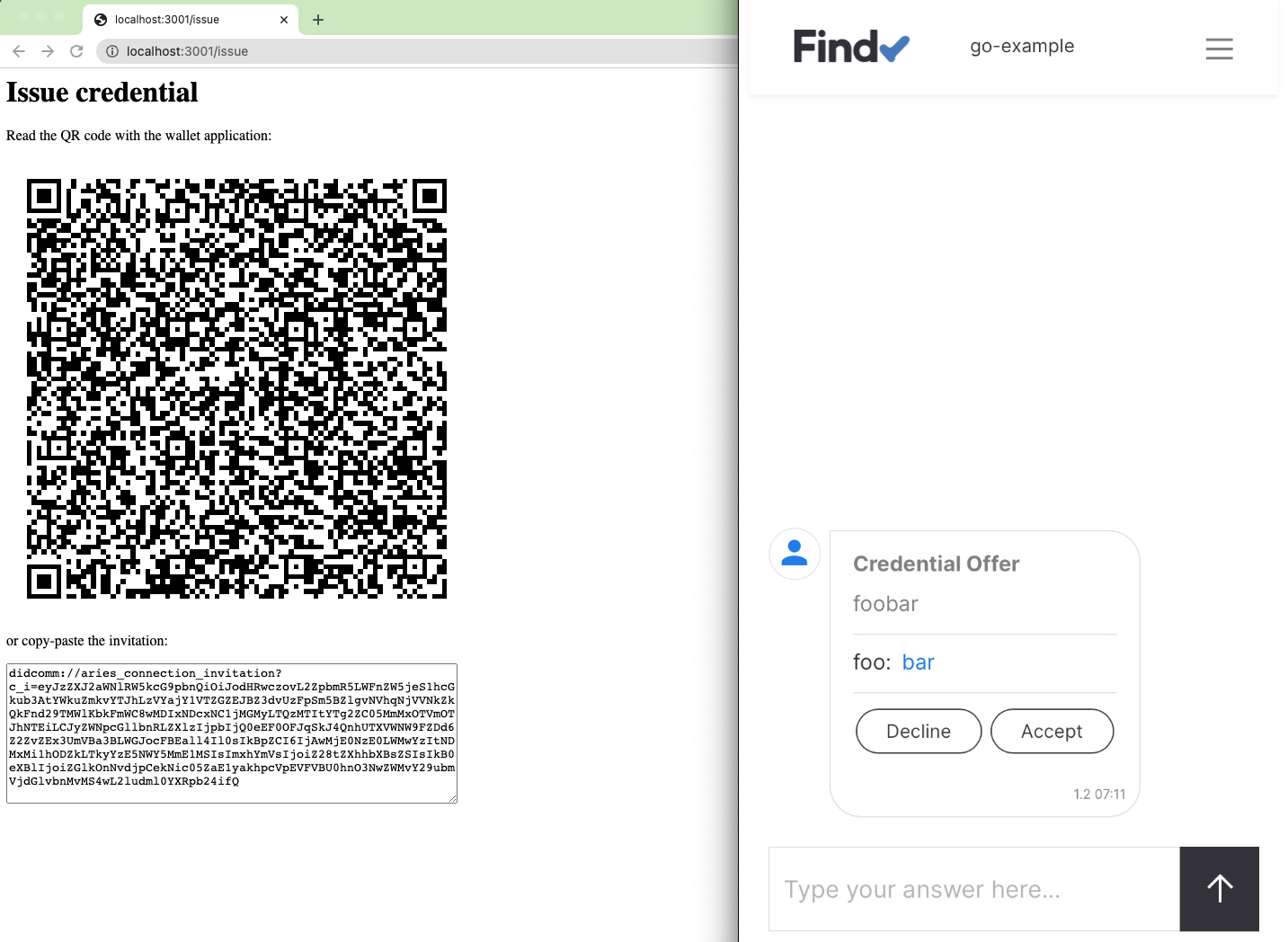

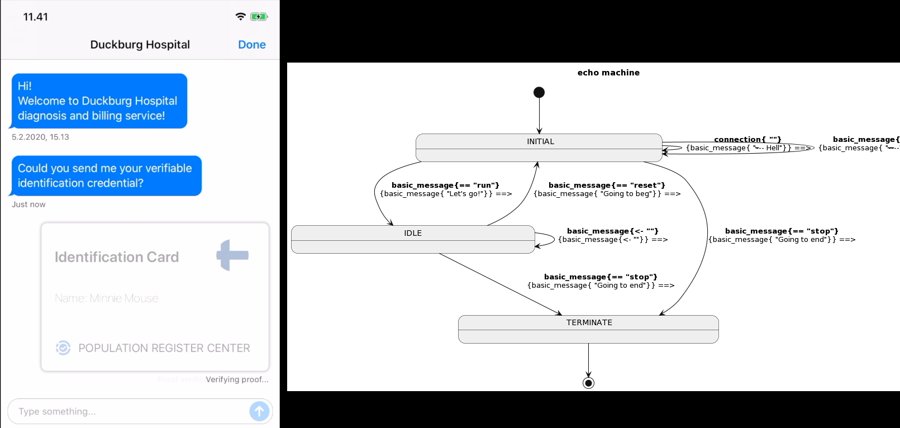

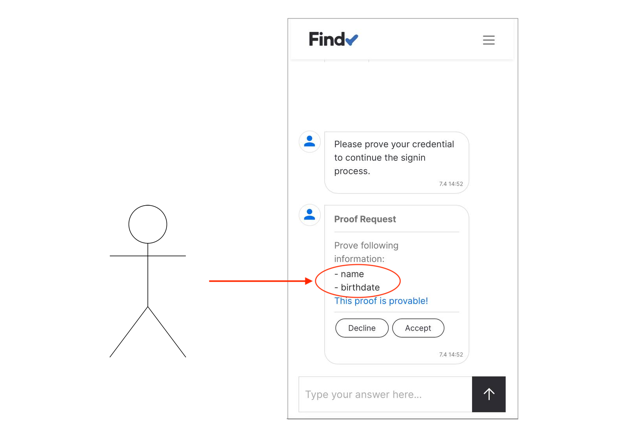

Issuing Chatbot

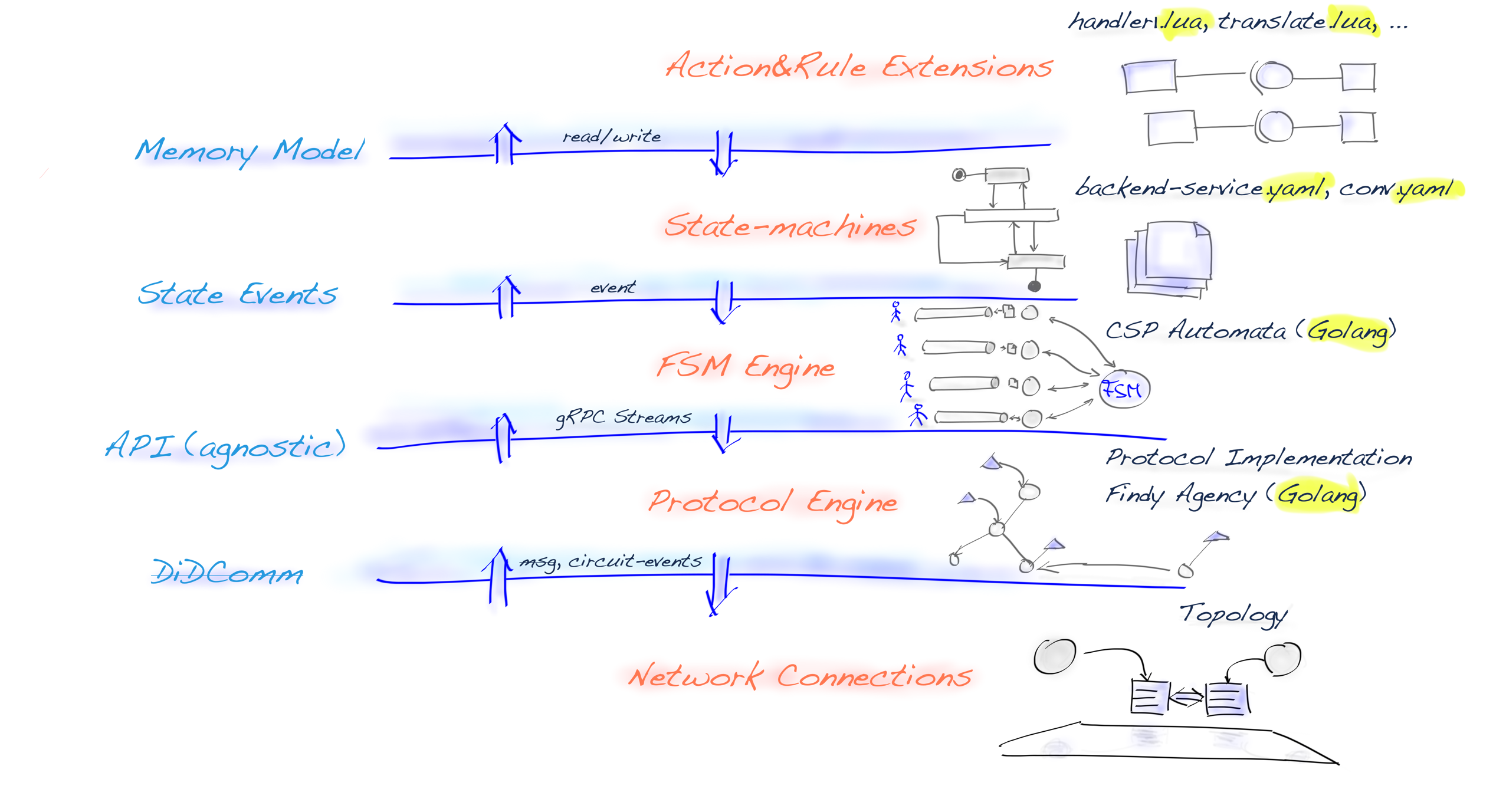

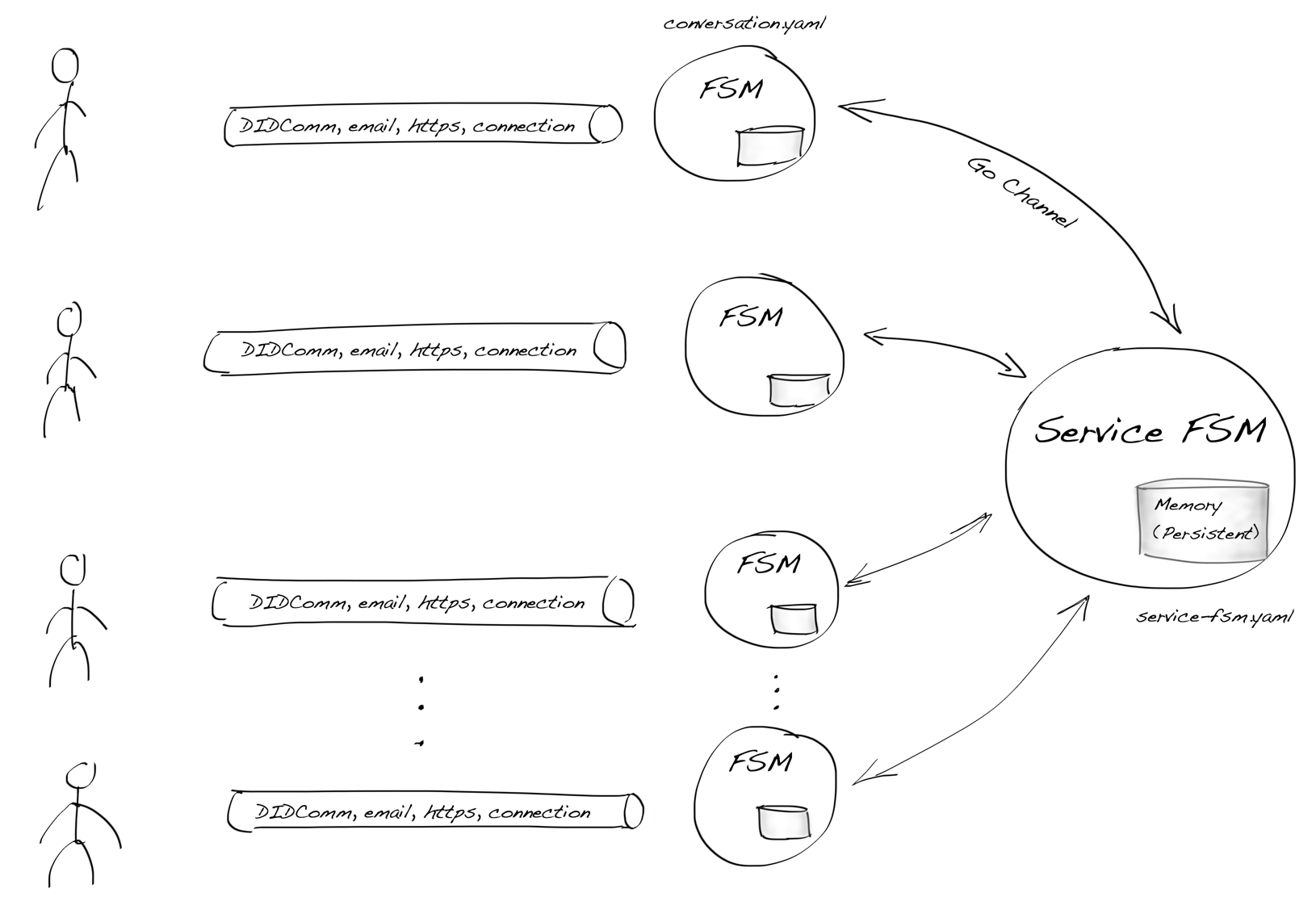

issuer, holder, and verifier. However, the base-technology (indylib) haven’t been built for symmetry. Luckily we have our own protocol engine and the tools around it allow us to build SSI services as chatbots. We have implemented our own state machine engine to develop and run these chatbot machines.This post is a development story (or hands-on workshop) of how we can use an FSM chatbot to implement SSI Services to allow any SSI/DID owner to be an issuer. Let’s start with the result and see what the state machine looks like. As you can see below, it’s simple, elegant, and, most importantly, easy to reason.

Issuing Service Chatbot

As you can see from the diagram there are two main paths. One for the issuer role

and one for the rcvr role. But the most interesting thing is that how simple the

machine is. As said, it’s very easy to reason and understand. And that’s the one of powers

these machines. The second one comes from the computational model of FSMs in

general, we could proof that they’re correct.

Note that since our original FSM engine release we have extended our model with transient state transitions or pseudostates, but our UML renderer doesn’t highlight them yet. We’ll publish an other blog post with new features of our FSM engine later.

What Problem Do We Solve?

The Hyperledger Indy-based SSI system is implemented with a CL signature scheme for ZKPs. That system needs the concept of Credential Definition stored in its creator’s wallet. The Credential Definition has an ID, which is quite similar to DID in the Indy-based AnonCreds system. The CredDefID is public. Everyone who knows it can request proof based on it or request to present a proof based on it.

But the CredDefID brings us some problems:

- How do we find a correct CredDefID when it’s needed?

- How about fully symmetric cases when everyone can simultaneously be an issuer, a holder, and a verifier? Everyone can issue credentials and receive proof of them in these use cases. For example, we have a use case where a seller (anybody in a marketplace) wants to issue a receipt for the transaction.

There are other problems, but the rest are based on the same core problem.

We’ll solve problem number 2 by using a notary-type service. We started with one service and implemented a reference chatbot to issue credentials on behalf of a logical issuer, aka seller. We also have implemented our version of a public DID. With these two, we have solved the problem quite elegantly.

Isn’t This Centralization?

In some ways, yes, but the result isn’t more centralized than the suggested trust registries for other or similar problems in the SSI field. In a certain way, this model adds self-sovereignty because now everyone can issue, and everyone can build these issuing services for their use cases.

More Reading

Before we continue, here’s a list of documents and places that are helpful when playing with these:

- Getting Started With SSI Service Agent Development

- Writing SSI Apps

- FSM Documentation Part I

- FSM Documentation Part II

- The Workshop Material For Our CLI Tool

Note that the blog post is written from self-learning material point of view. Preferably, you should read and execute the commands it guides you to do simultaneously.

Prerequisites

Note, for now, we assume that the reader uses the local setup of the agency. It makes it easier to follow the core agent’s real-time logs.

Even though you have probably cloned the repo. But if not, please do it now:

git clone https://github.com/findy-network/findy-agent-cli

Follow the material in the repo’s readme files or the workshop material

mentioned at the beginning to get your findy-agent-cli CLI tool working, i.e.,

communicating with your agency.

Helpers

- Go to repo’s root:

- Shorter name and autocompletion:

alias cli=findy-agent-cli source ./scripts/sa-compl.sh

Document for now on assumes that CLI tool is named to

cli.

Very Important

If you create new wallets directly with the CLI tool, make sure that auto-accept

mode is ON for the agent. The check it after the cli agent ping works:

cli agent mode-cmd -r

Tip, if you have problems with CLI commands check your

FCLI_prefixed envs.

The result should be AUTO_ACCEPT. Note that allocation scripts do this automatically.

Chatbots work even when auto-accept isn’t ON. They can written to make decisions to decline or acknowledge presented proofs, for example.

Setup Agents And Wallets

It would be best to have at least three wallets and their agents up and running.

Inside the findy-agent-cli repo, you have scrpits/fullstack directory. Let’s

name it a tool root for the rest of the documentation. For example:

export MY_ROOT=`pwd`

Follow the workshop documentation on how to allocate new agents with their wallets.

Allocate the following agents (actual commands and the script calls follow):

issuingwill be the issuing serviceseller, will be a seller, aka logical issuerbuyerwill be a buyer.verifierwill be a verifier for the VC. (Usage is out scoped from this document.)

Here’s an example of how you could

- allocate the agents,

- have access to FSM files by making links,

- create schema and credential definition (done in

issuing), - create DIDComm connections between

issuing,seller, andbuyerwhere the last is done in this phase just for testing the environment. During the service integration (e.g. marketplace app) to the app invitation or command to connect withis sent in its own step during the service use.

cd "$MY_ROOT"

make-play-agent.sh issuing seller buyer verifier

cd play/issuing

ln -s ../../fsm/issuing-service-f-fsm.yaml

ln -s ../../fsm/issuing-service-b-fsm.yaml

open `cli bot uml issuing-service-f-fsm.yaml` # UML rendering

source ./new-schema

source ./new-cred-def

./invitation | ../seller/connect

./invitation | ../buyer/connect

Optionally store a public DID of the Issuing Service Chatbot:

export PUB_DID=$(./pub-did print)

Note! Leave this terminal open and do not enter new commands to it yet.

Note!

source ./new-cred-definitializesFCLI_CRED_DEF_IDenvironment variable. Theissuing-service-f-fsm.yamlfile references to this variable, i.e. it’s mandatory, or you could hard-code the credential definition value to yourissuing-service-f-fsm.yaml.

Use The Issuing Service

- open 2 separated terminals

AandB(see the workshop material on how to init envs) to work as aseller, leave them to be. - open 2 separated terminals

AandB(see the workshop material on how to init envs) to work as abuyer, leave them to be. - go back to the previous

issuingterminal and start the chatbot:cli bot start --service-fsm issuing-service-b-fsm.yaml issuing-service-f-fsm.yaml -v=1 - go back to the

sellerterminalAand enter a command:cli bot read. This is a read-only terminal window for the chatbot’s responses. - go back to the

sellerterminalBand entercli bot chat. This is a write-only terminal window to send chat messages.- (optional: enter ‘help’ to get used to what’s available)

- enter your session ID, select something easy like ‘SID_1’

- enter the text ‘issuer’ that’s our current

role - enter your attributes data value for credential, select something easy to remember during verfication

- go back to the

buyerterminalAand entercli bot read. This is a read-only terminal window for the chatbot’s responses. - go back to the

buyerterminalBand entercli bot chat. This is a write-only terminal window to send chat messages.- (optional: enter ‘help’ to get some reminders)

- enter your previous session ID, it was something easy like ‘SID_1’

- enter the text ‘rcvr’, it’s your

rolenow

- see the

Buyer’sAterminal (cli bot readcommand running); the results should be that the credential is issued for theBuyer. - go to both

Bterminals and enter some text to move FSM instances to thestart-againstate. - it’s optional; you could rerun it with the same players.

Tip, when you started the Issuing Service Chatbot with

-v=1you could monitor it’s state transitions in real-time.

The Sequence Diagram

Notes about the current implementation:

- only one attribute value schema is implemented. Start with that and add cases where more attributes can be entered later. (Homework)

- every message sends a

basic_messagereply, which usually starts withACKstring. See theYAMLfile for more information. The reply messages aren’t drawn to the sequence diagram below to keep it as simple as possible. - you can render state machines to UML:We have UML rendered state machine diagram in the beginning of this post.

open `cli bot uml issuing-service-f-fsm.yaml` # give correct FSM file

sequenceDiagram

autonumber

participant Seller

%% -- box won't work on hugo, or when this machine is running it --

%% box Issuing Service

participant IssuerFSM

participant BackendFSM

participant RcvrFSM

%% end

participant Buyer

Seller -) IssuerFSM: 'session_id' (GUID)

Seller -) IssuerFSM: issuer = role

loop Schemas attributes

Seller -) IssuerFSM: 'attribute_value'

end

alt Send thru existing connection

Seller -) Buyer: 'session_id' (same as above, design how app knows that this is a command)

end

Buyer -) RcvrFSM: 'session_id'

Buyer -) RcvrFSM: rcvr = role

RcvrFSM -) BackendFSM: receiver_arriwed

BackendFSM -) IssuerFSM: rcvr_arriwed

loop Schemas attributes

IssuerFSM -) BackendFSM: 'attribute_value'

BackendFSM -) RcvrFSM: 'attribute_value'

end

IssuerFSM -) BackendFSM: attributes done (not implemented, one attrib)

BackendFSM -) RcvrFSM: attributes done (not implemented, one attrib)

RcvrFSM -) Buyer: CREDENTIAL ISSUING PROTOCOLPre-steps (not in the diagram)

- We can generate a public DID for the Issuing Service Chatbot.

cd play/issuing # or where your bot is export PUB_DID=$(./pub-did print) - Save this

PUB_DIDto your app’s configuration. It’s where Issuing Service Chatbot can be found when needed. Note,PUB_DIDis a URL which returns a new invitation on every load. You can treat is as a URL template:

You can enter your case specific data like ad number to thehttp://localhost:8080/dyn?did=8NCqkhnNjeTwMmK68DHDPx&label=<you_ad_number>labelarg.

Steps

- Actual Seller role or app implementation for the role generates a sessionID

(GUID) and sends it to Issuing Service Chatbot as a

basic_message. - The Seller role is a logical issuer, so it sends the

issuerstring as abasic_messageto the Issuer FSM instance. - The Seller role sends a <attr_val> (case specific in your schema) as a

basic_message. - The Seller role sends the same sessionID directly to the buyer role. The

communication channel can be their existing DIDComm connection or something

else, but the buyer needs to know how to react to that line if it’s a

basic_message. - The Buyer role or app implementation for the role sends the received

sessionIDto the chatbot, i.e., joins the same session. - The Buyer role sends the

rcvrword to the chatbot to make explicit role selection. (We could leave this out in some version of FSM implementation and rely only on the order of the messages, but this allows us to understand better and keep things open for future extensions.) - The Rcvr FSM instance has now got the actual credential holder

(Buyer/Receiver) and it sends a

receiver_arriwedstring to the Backend FSM. - The Backend FSM sends a

rcvr_arriwedto the Issuer FSM as abasic_message. - Now the Issuer FSM loops thru all previously received (from Seller) attribute values and sends them to the Backend FSM.

- The Backend FSM sends the attribute values to the Rcvr FSM as a

basic_messages - Optional for the future: if there would be more attributes than one, this would be the place to send the information that all attributes are sent to the Backend FSM. Another way to implement these state machines would be to add information to both Issuing and Receiving FSMs how many attributes there are, and receiving states would be declared to rely on that knowledge.

- Optional: see the previous step. The Backend FSM works as a forwarder for all of the cases where the issuing and the receiving FSM instances need to communicate with each other through the chatbot service.

- Finally the RcvrFSM executes credential issuing protocol.

Conclusion

You have been blown away by how easy it is to implement these FSM-based chatbots, haven’t you? The Issuing Service is only one example of the potential of the FSM chatbots. We are excited to see what else you end up building. When doing so, please send your questions, comments, and feedback to our team. Let’s make this better—together.

I want mDL!

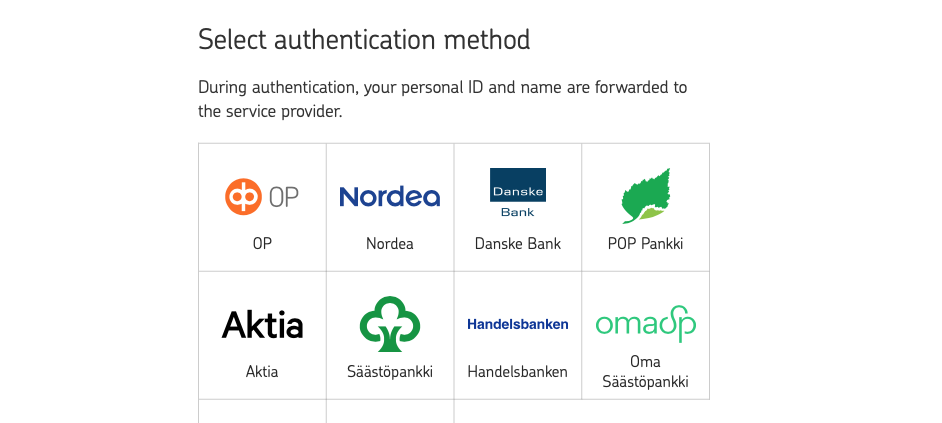

It’s funny that we don’t have a mobile driver’s license in Finland. In the Nordics, we are usually good with digital and mobile services. For example, we have had somewhat famous bank IDs from the early 90s.

For the record, Iceland has had a mobile driver’s license since 2020. Surprise, Finland was in the pole position in the summer of 2018. The government-funded mobile driver’s license app (beta) was used with 40.000 users. The project started in 2017 but was canceled in 2020 (link in Finnish).

How the heck have we ended up in this mess? We should be the number one in the world! Did we try to swallow too big a bite once when the country-level SSI studies started?

SSI Study

As you probably already know, our innovation team has studied SSI for a long time. We have started to understand different strategies you can follow to implement digital identity and services around it.

Trust-foundation

Christopher Allen, one of the influencers in the field of SSI, divided the Self-Sovereign identity into two primary tracks:

- LESS (Legally-Enabled Self-Sovereign) Identity

- Trustless Identity, or more precisely Trust Minimized Identity

These two aren’t mutually exclusive but give us a platform to state our goals. Which one do we prefer, a government or an individual?

| LESS Identity | Trust Minimized Identity |

|---|---|

| Minimum Disclosure | Anonymity |

| Full Control | Web of Trust |

| Necessary Proofs | Censorship Resistance |

| Legally-Enabled | Defend Human Rights vs Powerful Actors (nation-states, corps, etc.) |

The above table is from the Allen’s talk in YouTube.

I personally prefer Human Rights over Legally-Enabled.

However, from a researcher’s point of view, the LESS Identity track seems faster because it’s easier to find business cases. These business-driven use cases will pave the way to even more progress in censorship resistance, anonymity, etc. The mobile driver’s license is a perfect example of a LESS Identity practice. Let’s follow that for a moment, shall we?

Level Of Decentralization

Most internet protocols have started quite a high level of decentralization as their goal/vision through the history of computer science. There are many benefits to setting decentralization as a requirement: no single point of failure, easier to scale horizontally, etc.

Since blockchain, decentralization has become a hype word, and most of us need help understanding what it means to have fully decentralized systems. One easily forgotten is trust, and we will only achieve absolute decentralization once we have a proper model for Self-Certification.

Idealism vs Pragmatism

I see a paradox here. How about you? Why does anyone try to build maximally decentralized systems if their identities must be legally binding? Or why do we put a lot of effort into figuring out consensus protocols for systems that don’t need them?

Our legal system has solved all of these problems already. So, let’s stick on that be pragmatic only, shall we?

Pragmatism

My current conclusion is the old wisdom: don’t build a platform immediately, but solve a few use cases first and build the platform if needed.

Second might be don’t solve imaginary problems. Look for monetizable pain first and solve that with as small steps as possible.

Let’s see what that all means from SSI vs. mDL.

Example of Good UX

Apple Pay is the world’s largest mobile payment platform outside China. It’s been exciting to follow what happened in the Nordics, which already had several mobile payment platforms and the world’s most digitalized private banking systems when Apple Pay arrived.

Why has Apple Pay been so successful? Like many other features in Apple’s ecosystem, they took the necessary final technical steps to remove all the friction from setting up the payment trail. Significantly, the seller doesn’t need additional steps or agreements to support Apple Pay in the brick-and-mortar business. (That’s how it works in Finland.) That’s the way we all should think of technology.

Use Case -driven approach

The origins of SSI have been idealistic in some areas. The ISO mDL is the total opposite. Every single piece of idealism has been thrown away. Every design decision is hammered down to solve core use cases related to the use of mobile driver’s licenses. And no new technologies have been invented. Just put together features that we need.

I had to admit that it’s been refreshing to see that angle in practice after ivory towers of SSI ;-) For the record, there is still excellent work going on in the SSI area in general.

Differences Between mDL And SSI

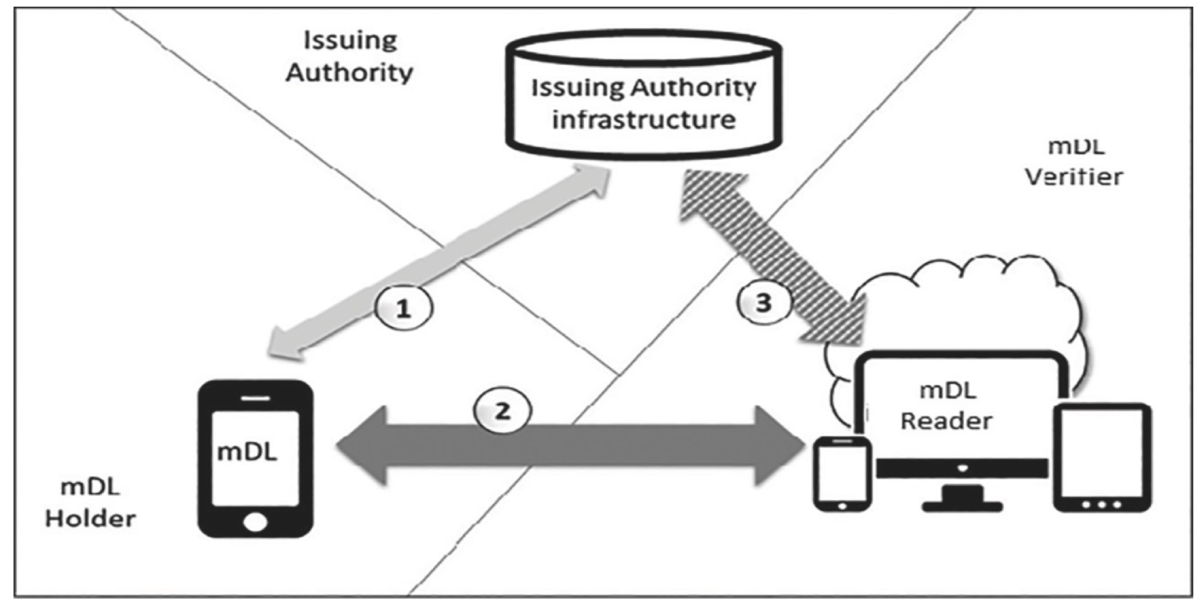

mDL has almost a similar trust triangle as good old SSI-version.

mDL Interfaces And Roles — ISO ISO_18013-5

But when you start to look more carefully, you’ll notice some differences, like the names of similar parties.

Concepts

ISO calls these roles as interfaces.

| ISO 18013-5 Interface Party | DID/SSI Concept |

|---|---|

| Issuing Authority Infrastructure | Issuer |

| mDL Reader | verifier |

| mDL | Holder |

Connections

Also, connections between parties are different. Where SSI doesn’t allow direct communication between a verifier and an issuer, mDL explains that their communication is OK but optional. The only thing that matters is that the mDL Holder and mDL Reader can do what they need to execute the current use case. For example:

For offline retrieval, there is no requirement for any device involved in the transaction to be connected

‘Connected’ means connected to the internet. One of the scenarios is to support offline use cases, which makes sense if you think about instances where law enforcement officer needs to check the ID. That must be possible even when the internet connection is severed.

We continue with transport technologies at Mobile Driver’s License.

Revocations

We should also ask when that call-home is needed. The first and most obvious one is the validity checks. If the use case demands that the relying party checks that the mobile document (mDOC) is still valid on every use, a verifier can contact the issuer (Issuing Authority) and ask. All of this sounds pragmatic.

Call-home seems perfectly aligned with Finnish bank regulation and legislation, as far as I know. For example, the party who executes, let’s say, a transaction according to power-of-attorney (PoA) is the party who’s responsible for checking that a PoA is valid and not revoked. The responsibility to transfer revocation information is not the one who has given the PoA. It’s enough that the information is available for the party who relies on the PoA. It’s the relying party’s responsibility to access that information case-by-case.

It makes much sense and makes building revocation easier when you think about that. In summary, some call-home is the only way to make revocation requirements work. Note that the home isn’t necessarily the issuer, but it definitely can be seen as the Issuing Authority’s Infrastructure.

One schema

The most specific difference between mDL and SSI is that the schema is locked. It’s based on (mDOC) standard. That might first feel like a handicap, but the more you think about this, the better way it is to start implementing use cases in this area.

Mobile Driver’s License

mDL standard also has similarities to SSI, such as selective disclosure. But it and other features are designed with only one thing in mind: pragmatism. No Fancy Pancy features or saving-the-world idealism, just pure functionality.

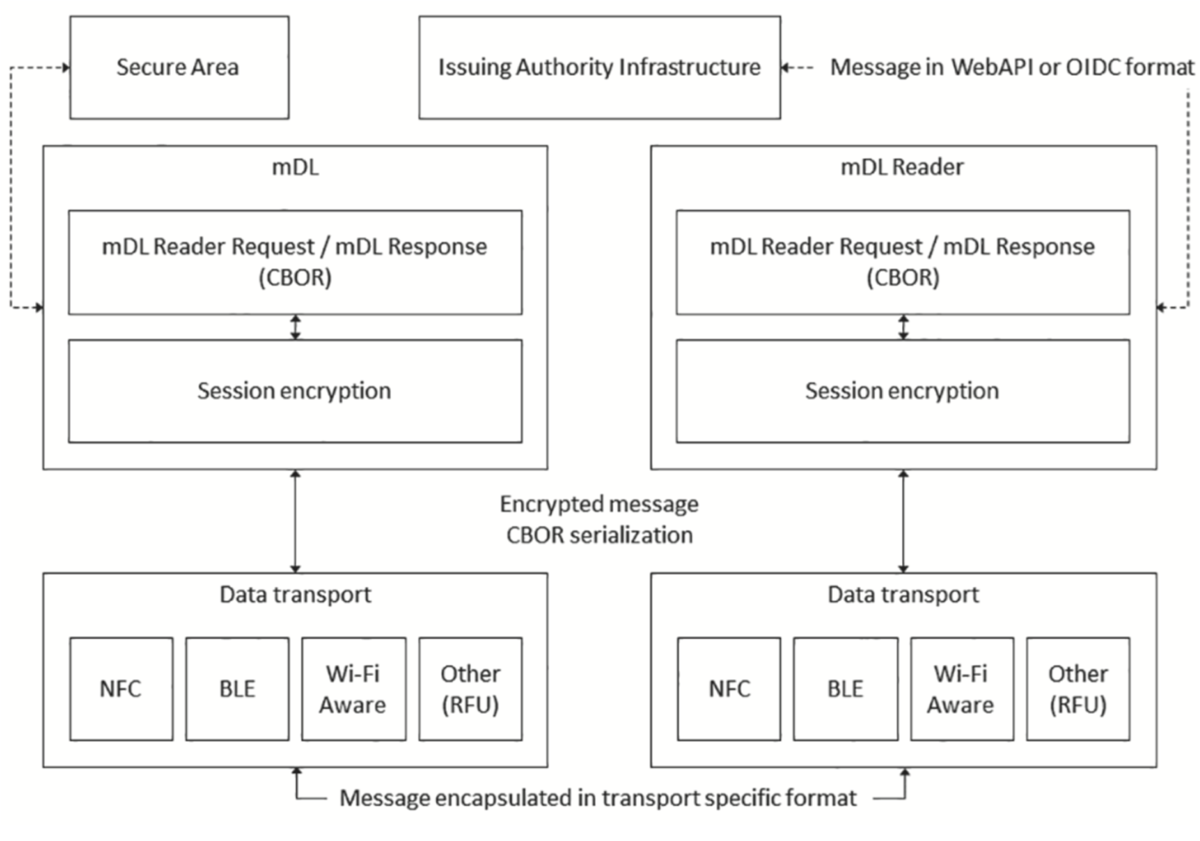

The ISO standard defines the mDL standard, which is based on mDOC. The following diagram describes its most important architectural elements.

mDL ISO Architecture — ISO ISO_18013-5

The diagram presents both logical and physical parts of the mDL architecture. At the bottom are supported data transport technologies: NFC/Bluetooth, Wi-Fi Aware (optional), etc. Transported data is CBOR coded, which guarantees the best possible performance. CBOR is a binary-coded data format optimized for limited resources and bandwidth.

Selective Disclosure

mDL’s selective disclosure is analog to SD-JWT’s mechanism, i.e., only disclosures’ digests are used when the issuer signs the credential document. That allows simple and easy-to-understand implementation, which is also efficient. At first glance, it only supports property-value pairs, but I don’t see why it couldn’t allow the use of hierarchical data structures as well. However, because the digest list is a one-dimensional array, it would prevent selection from inside a hierarchy.

No Need For ZKP

mDL doesn’t have ZKP but

has solved similar use case requirements with the attestations. For example,

the mDL issuer will include a set of age-over attestations into the mDL. The

format of each attestation identifier is age_over_NN, where NN is from 00 to

99.

When mDL Reader sends the request, it can, for example, query the presence of the

attestation age_over_55, and the response will include all the attestations

that are equal to or greater than 55 years old. For example, if mDL doesn’t have

age_over_55 but it has age_over_58 and age_over_65, it will send

age_over_58.

Conclusion

mDL specification is excellent and ready for broad adoption. I hope we can build something over it ASAP. Unfortunately, the road I selected for PoCs and demos wasn’t possible because Apple’s ID Wallet requires that your device is registered in the US. There are ways to try it on an emulator, but it lacks too many core features to be interesting enough. Suppose you are asking why Apple and why not something else; the answer is that I’m looking at this on the operation system (OS) wallet track. Apple also has exciting features like Tap to ID.

The next step will be to study what we can learn from mDOC/mDL from the DID point of view. Is there some common ground between how mDL sees the world and how DIDComm and generic SSI sees the world—hopefully, the same world.

Until next time, see you!

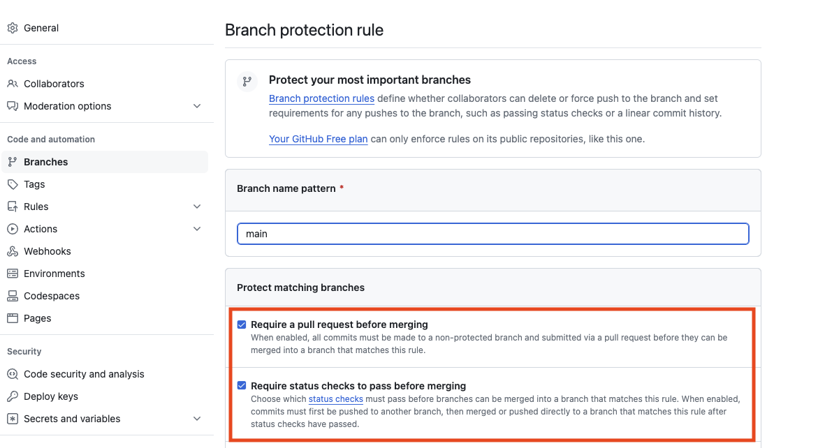

Managing GitHub Branch Protections

GitHub has recently improved its automatic pull request handling and branch protection features. I have found these features handy, and nowadays, I enable them regardless of the project type to help me automate the project workflows and protect the code from accidental changes and regression bugs.

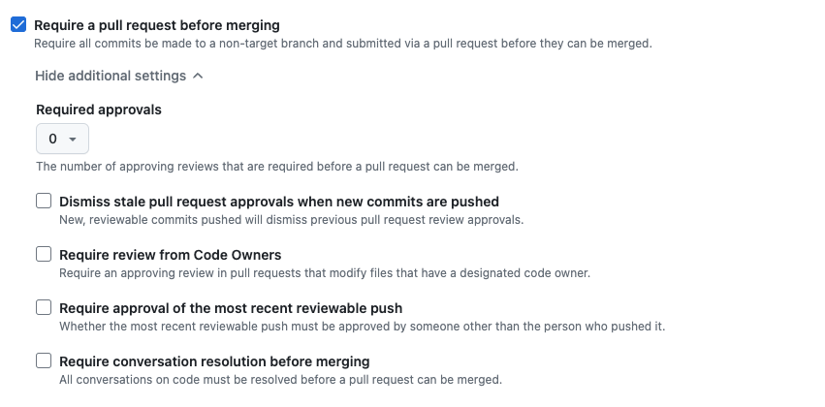

The features I find most useful are

- Require a pull request before merging: This setting enforces a model where the developers cannot accidentally push changes to the project’s main branches.

- Require status checks to pass before merging: This branch protection setting allows one to configure which status checks (e.g., unit tests, linting, etc.) must pass before merging the pull request.

- Allow auto-merge: This setting allows me to automate the merging of the PR once all the CI has run all the needed checks. I do not need to wait for the CI to complete the jobs. Instead, I can continue working on other things. In addition, I use this feature to merge, for example, dependency updates automatically. Note: auto-merging works naturally only when the branch protection checks are in place.

Until now, I have used GitHub’s branch protection feature to enable these additional shields. With this settings page, you can easily protect one or multiple branches by configuring the abovementioned options.

One can configure branch protection rules in the repository settings.

Branch Protection Prevents Releasing

However, when enforcing the branch protection, it applies to all users. That also includes the bot user I am using in many projects to release: creating a release tag, updating version numbers, and committing these changes to the main branch.

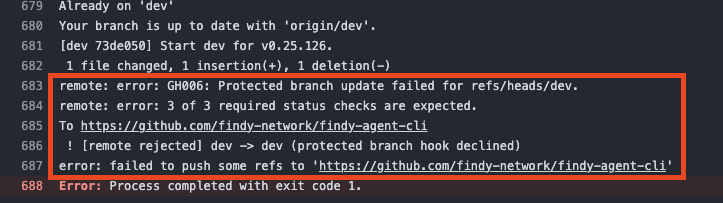

Releaser bot is unable to push version update when branch protection is enabled.

Suddenly, when the branch protection is enabled,

the release bot cannot do its duties as it cannot push to the protected branch.

Error states: Protected branch update failed for refs/heads/<branch_name>, X of X required status checks are expected.

Therefore, to overcome this problem, I have configured the bot to use pull requests. These workaround pull requests have slowed the process and made it unreliable. In some cases, I have been using a user token with administrative permissions to make the releases, which I want to avoid as it has evident problems in the security model.

Rulesets to the Rescue

Finally, this week, I reserved some time to investigate whether it is possible to avoid these limitations. I had two targets: first, I wanted to protect the main branch from accidental pushes so developers could make changes only via pull requests vetted by the CI checks. Second, I wanted the release bot to be able to bypass these rules and push the tags and version changes to the main branch without issues.

I googled for an answer for a fair amount of time. It soon became apparent that many others were struggling with the same problem, but also that GitHub had released a new feature called rulesets, intended to solve the problem. However, a few examples were available, and the configuration of the rulesets was not intuitive. Therefore, I have documented the steps below if you wish to use a similar approach in your project.

The instructions below are three-phased:

- Creating a GitHub application for the release process operations

- Configuring rulesets that protect the main branch but still allow releasing

- Using the newly created GitHub application in the GitHub Actions workflow

GitHub Application

The first step is to create a GitHub application that handles the git operations in the CI release process for you.

Why to Use an Application?

There are multiple reasons why I chose to make a dedicated GitHub application instead of using a personal access token or built-in GitHub Actions token directly:

- The App installed in an organization is not attached to the user’s role or resource access as opposed to the personal access tokens.

- App does not reserve a seat from the organization. Creating an actual new GitHub user would reserve a seat.

- One can grant an application special permissions in rulesets. We want to treat all other (human) users similarly and only grant the bot user special access. This approach is impossible when using personal access tokens or built-in tokens.

- We want to activate other actions from pushes done by the releaser. For instance, if we create a tag with the releaser bot, we want the new tag to trigger several other actions, e.g., the building and packaging the project binary. If using a built-in GitHub Actions token, new workflows would not be triggered, as workflows are not allowed to trigger other workflows.

One can use GitHub Applications for multiple and more powerful purposes, but the releaser bot only needs minimal configuration as its only duty is to do the releasing-related chores.

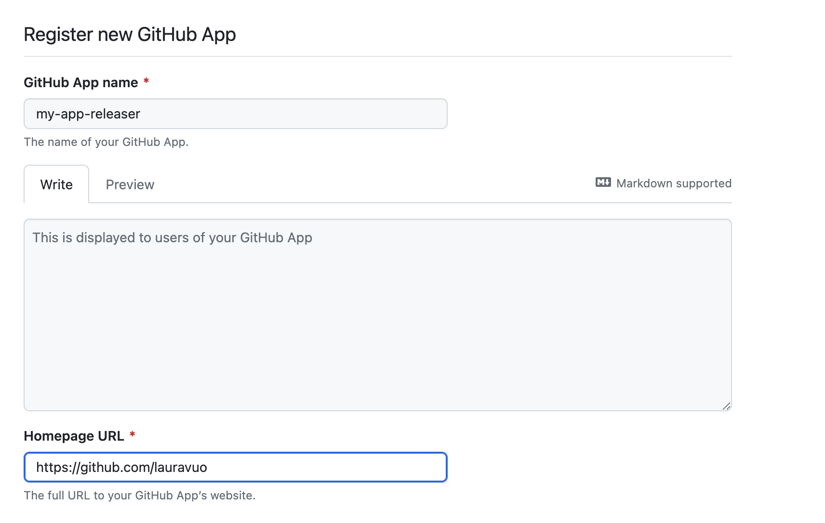

1. Register Application

Start the application registration via user profile Developer settings or

this link.

Registering new GitHub application.

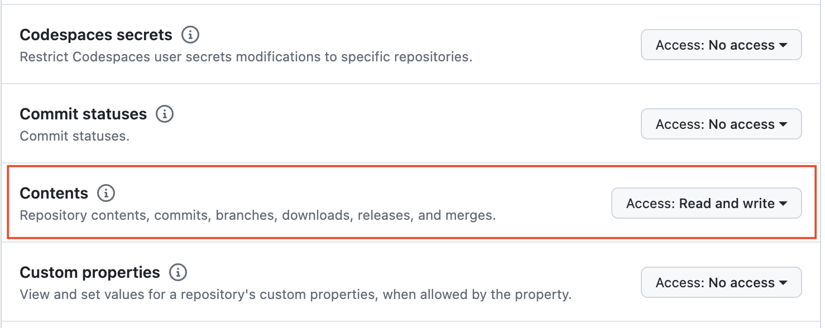

When creating the new application for the releasing functionality, the following settings need to be defined:

- Application name: e.g.

releaser-bot - Homepage URL: e.g. the repository URL

- Untick

Webhook/Active, as we don’t need webhook notifications. - Choose permissions:

Permissions/Repository/Contents/Read and write.

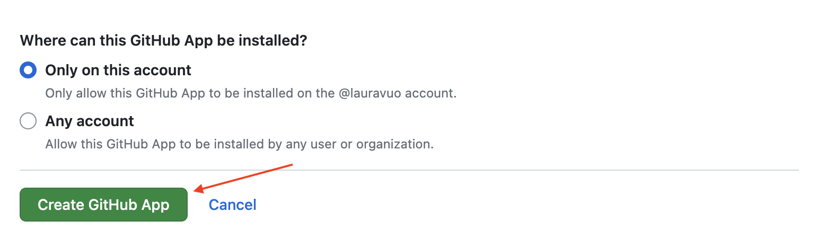

- Choose selection:

Where can this GitHub App be installed?Note: If you want to use the application in an organization’s repositories, make it public. - Push

Create GitHub App.

2. Download Private Key

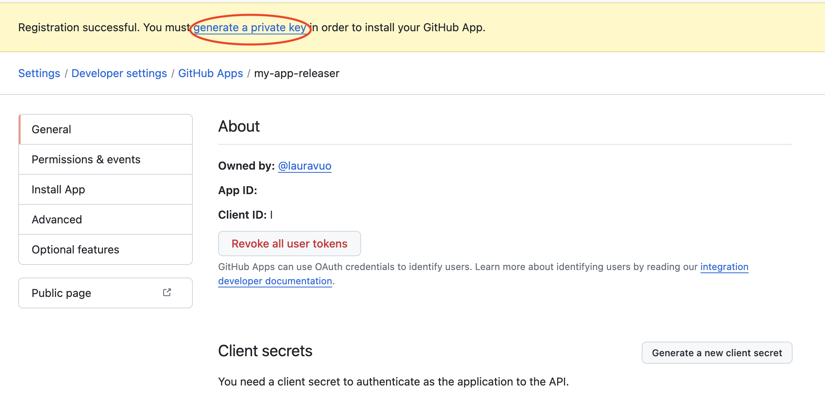

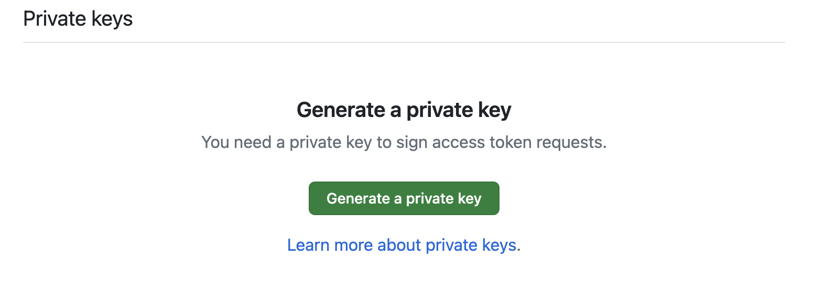

After creating the app, you will receive a note saying,

Registration successful. You must generate a private key to install your GitHub App.

Navigate to the private keys section and push the Generate a private key button.

The private key file will download to your computer. Store it in a secure place; you will need it later.

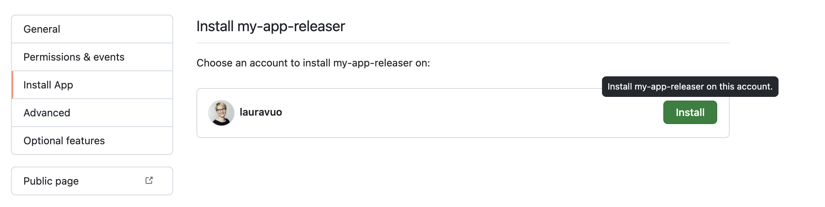

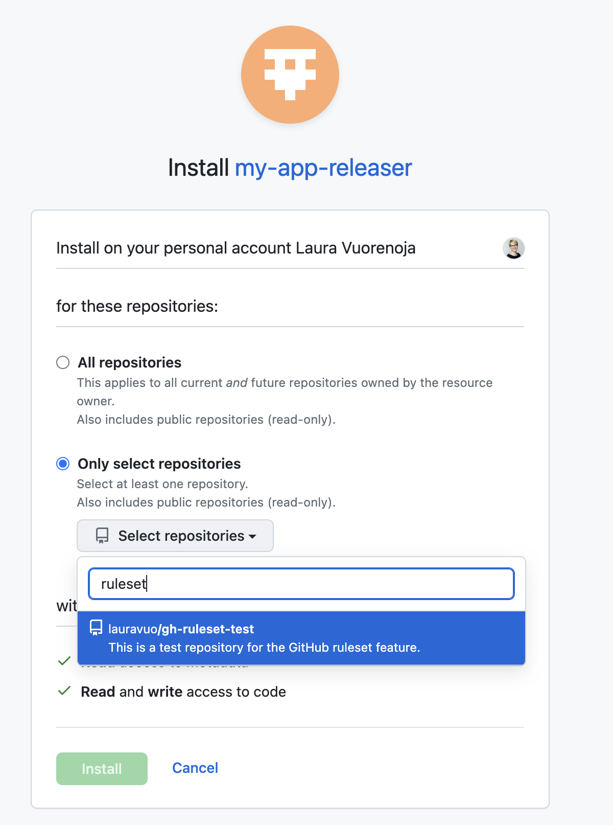

3. Install the Application

Before using the application in your repository’s workflow:

- Install the app in the target repository.

In the created application settings, go to the

Install Appsection. - Select the user or organization for which you want to install the application.

- Select if you wish to use the application in a single repository or all account repositories.

- Push the

Installbutton.

Remove Existing Branch Protections

The rulesets feature will work on behalf of the branch protection settings. To avoid having overlapping configurations, remove first any existing branch protections.

Rulesets

The next step is to create the rulesets.

I crafted the following approach according to the original idea presented in the GitHub blog. The goal is to protect the main branch so that:

- Developers can make changes only via pull requests that have passed the status check

test. - The releaser bot can push tags and update versions in the GitHub Actions workflow directly to the main branch without creating pull requests.

You may modify the settings according to your needs. For instance, you may require additional status checks or require a review of the PR before one can merge it into the main branch.

Configuration

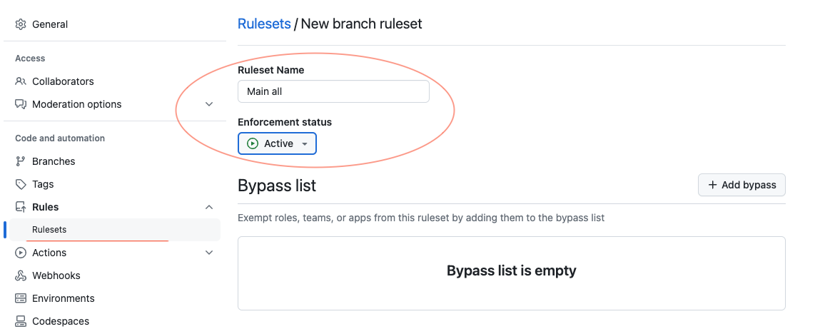

First, we will create a rule for all users. We do not allow anyone to delete refs or force push changes.

Go to the repository settings and select Rulesets:

- Create a

New rulesetby tapping theNew branch ruleset. - Give the

Main: allname for the ruleset. - Set

Enforcement statusasActive.

- Leave

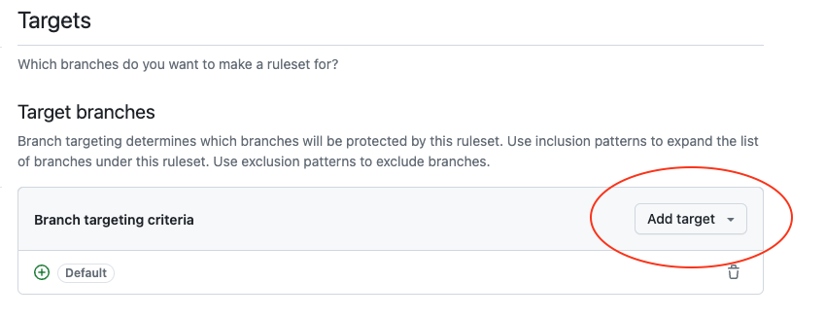

Bypass listempty. - Add a new target branch.

Include default branch(assuming the repository default branch is main).

- In

Rulessection, tickRestrict deletionsandBlock force pushes.

- Push the

Createbutton.

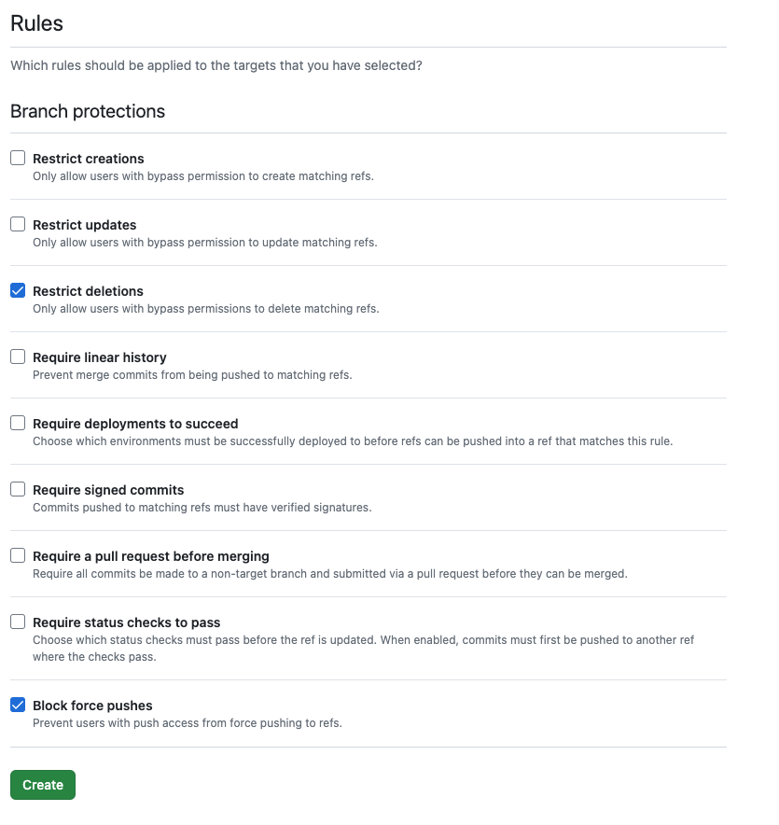

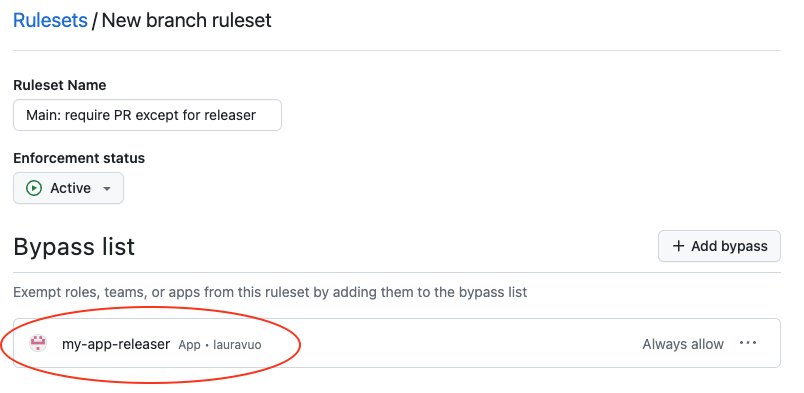

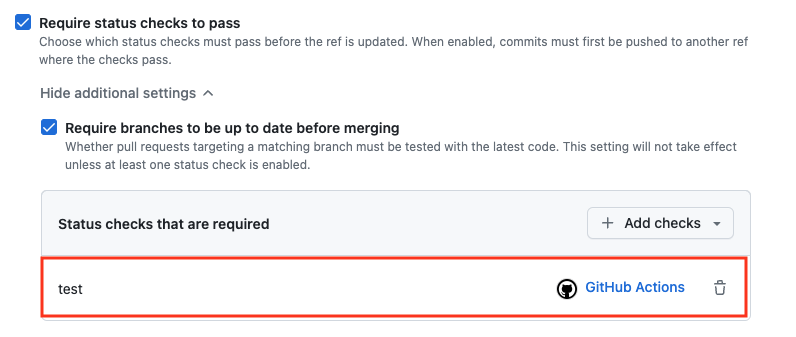

Then, we will create another ruleset that requires PRs and status checks for any user other than the releaser bot.

- Create a

New rulesetby tapping theNew branch ruleset. - Give the

Main: require PR except for releasername for the ruleset. - Set

Enforcementstatus asActive. - Add your releaser application to the

Bypass list.

- Add a new target branch.

Include default branch(assuming the repository default branch is main). - Tick

Require a pull request before merging.

- Tick

Require status checks to passandRequire branches to be up to date before merging.Addtestas a required status check.

- Push the

Createbutton.

Use Bot in GitHub Actions Workflow

The final step is configuring the release process to use our newly created GitHub application.

Add Secrets for Release Workflow

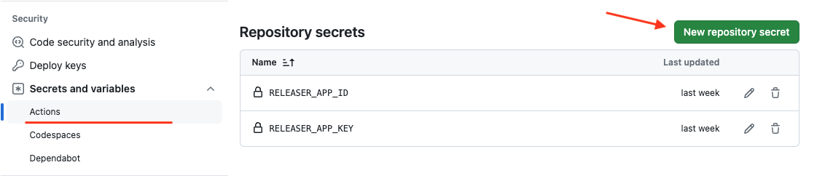

To create a token for the releaser bot in the GitHub Actions workflow, we must have two secret variables available.

Go to repository Settings / Secrets and variables / Actions. Create two new secrets:

RELEASER_APP_ID: Copy and paste your GitHub application ID (app ID) from the GitHub application settings page.RELEASER_APP_KEY: Copy and paste the contents of the private key file downloaded earlier.

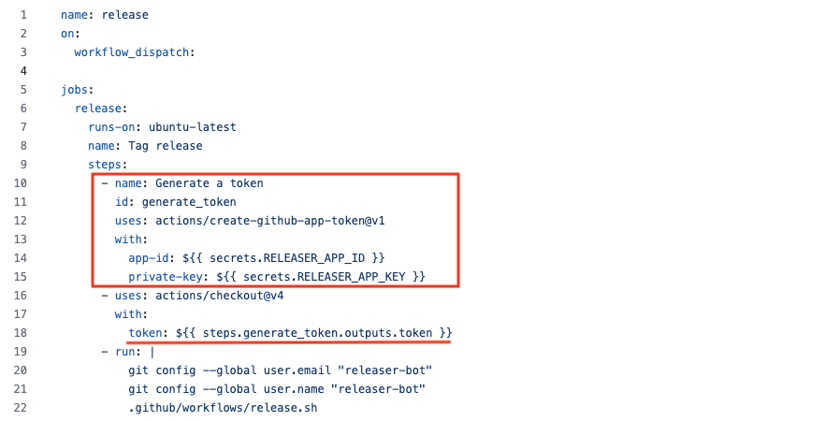

Generate Token with the GitHub Application

In the release workflow, generate a token with the GitHub Application.

For this, you can use a special action, actions/create-github-app-token,

that utilizes the secrets defined in the previous step.

You can use the generated token for the repository cloning step. One can access the token using the outputs of the token generation step. Since the repository gets cloned with the bot token, the bot user will perform subsequent git actions.

You can find the sample workflow in GitHub.

So, the release script can now push directly to the main branch as we use the releaser bot token and have configured a bypass rule for the bot user in the rulesets. At the same time, other users cannot push to the main branch but must create a PR for their changes.

Summary

That’s it! The above steps show how to automate project release workflow in GitHub Actions with a dedicated bot user while still having repository branch protections to shield from accidental changes and unvetted code. I hope the instructions are of use to you. I’m always happy to have comments and ideas for improvement; you can contact me on LinkedIn!

Path to Passwordless

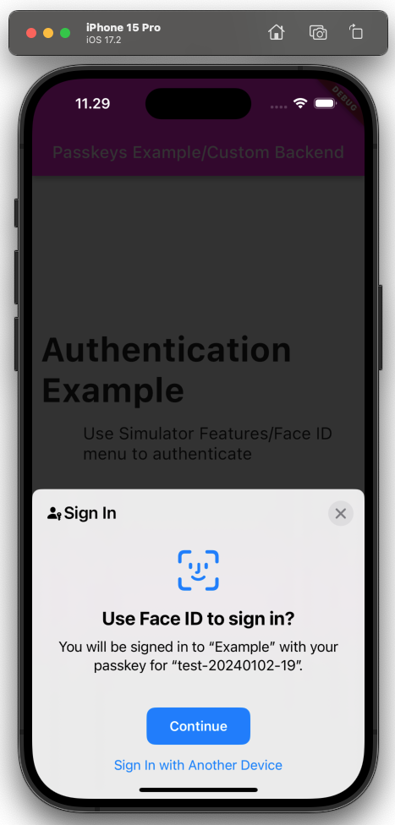

Passkeys and security keys have gained more and more popularity lately, and no wonder – they provide much more security and usability than passwords. These authentication methods utilize strong and phishing-resistant public key credentials that the keys or authenticators, as we call them, can create automatically. However, implementing passwordless support in your web application, or even replacing passwords altogether, might initially seem overwhelming.

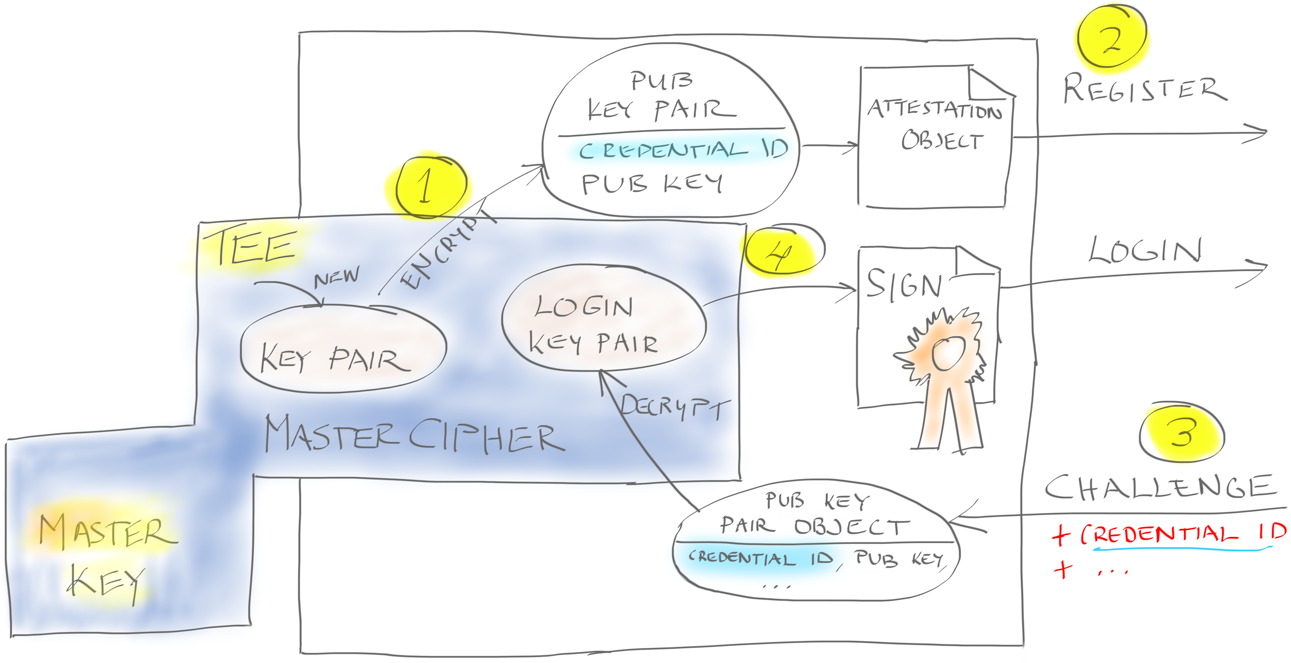

FIDO2 and WebAuthn Standards Ease the Job

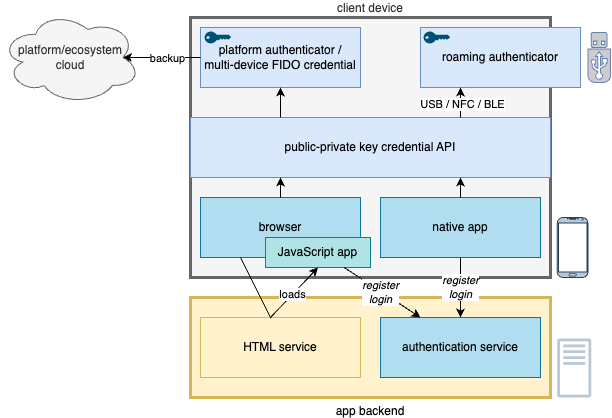

Integrating the authenticators into your web application happens through common standards created by the FIDO alliance. It is good news for us application developers: we don’t have to care about the dirty details of each authenticator implementation. Our job is to take into use the platform or browser APIs that enable the creation and use of the authenticator-managed credential. The operating system or browser handles needed user interaction and shows standard dialogs when appropriate.

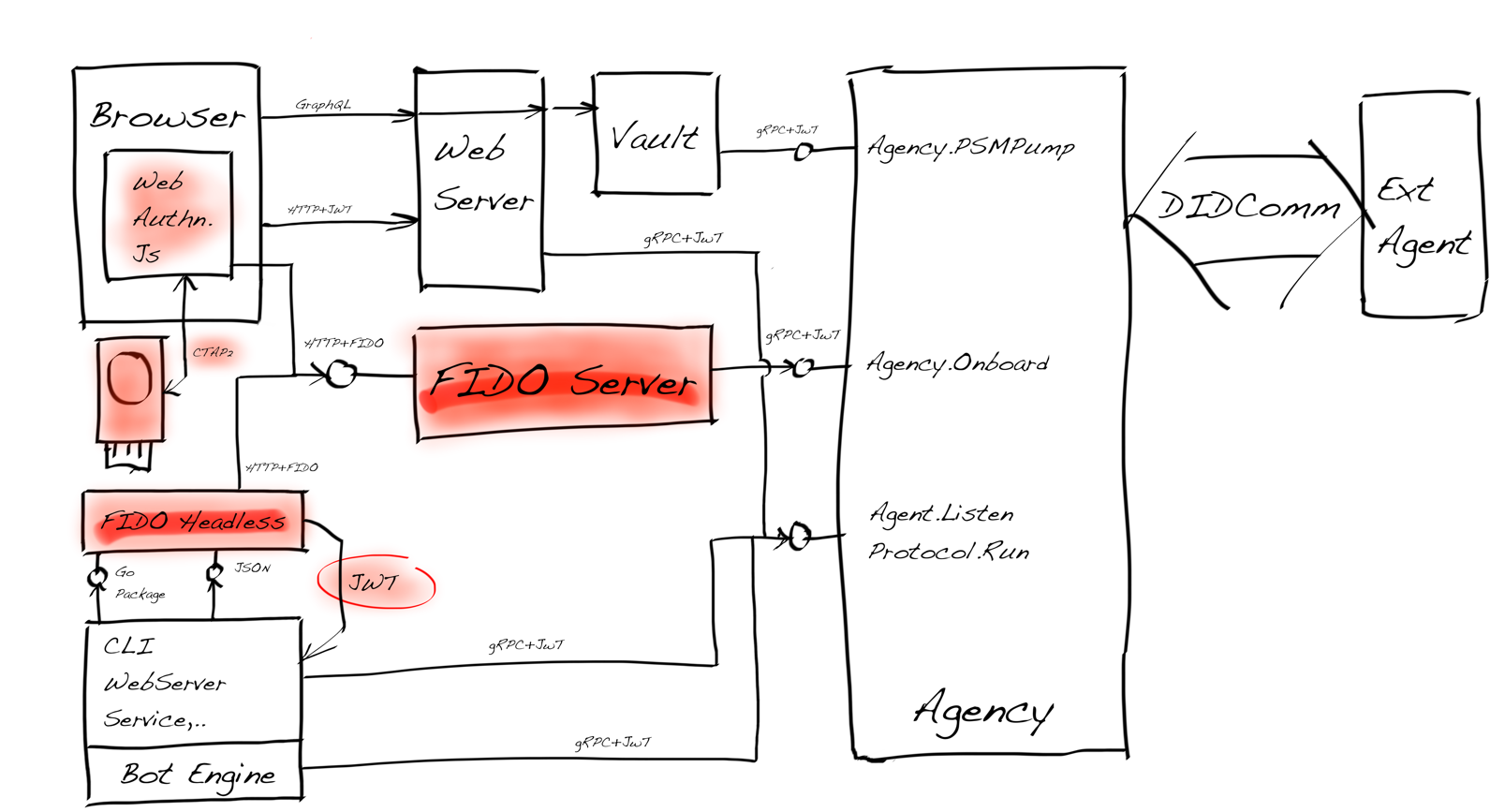

The team implements passwordless support by integrating credential handling into the client application and the backend authentication service. Client applications can use the platform capabilities through the native APIs or browser JavaScript implementation. The backend service must support at least credential registration and credential-based user authentication.

Furthermore, we need to have a backend service in place that is capable of storing and verifying these credentials so that the authentication can take place correctly. The service must support the W3C WebAuthn standard so that the backend API responses are compatible with the client-side authenticator logic. The backend service is called quite often as a FIDO2 server.

To Buy or To Build?